One of the problems in process manufacturing is that processes tend to drift over time. When they do, we encounter production issues. Immediately, management wants to know, “what’s changed, and how do we fix it?” Anomaly detection systems can help us provide some quick answers.

When a manufacturing process deviates from its expected range, several problems can arise. The plant may experience production, quality, environmental, cost, or safety issues.

One or more of these issues will present itself, and the question from management is always, “what changed?” Of course, they’d really like to know exactly what to do to fix it, but fundamentally, we need to know what changed to get us into this situation.

Usually the culprit is either the physical equipment – maybe maintenance that’s been performed recently that threw things off – or it’s in the way we’re operating the equipment.

From a process engineer’s or process operator’s perspective, we need to quickly identify what changed. The plant may be losing money every minute we operate like this, so operators, engineers, supervisors… everyone is under pressure to fix the problem as soon as possible.

To do this, we need to understand how the values have changed and how frequently those changes occur. In other words, how big are the swings, and how often are they occurring?

Time Series Anomaly Detection Methods

Let’s begin by looking at some time series anomaly detection (or deviation detection) methods that are commonly used to troubleshoot and identify process issues in plants around the world.

Absolute Change

This is the simplest form of deviation detection. For Absolute Change, we calculate a baseline average over a period when things are running well. Later, when things aren’t running so hot, we look back and see how far the process has moved from that average.

Absolute change is used to see if there was a shift in the process that has made the operating conditions less than ideal. This is commonly used as a first pass when troubleshooting issues at process facilities.
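As a rough illustration, the Absolute Change comparison boils down to comparing two averages. The sketch below is a minimal Python version, assuming each tag’s good-period and bad-period readings are already available as plain lists of numbers; the function name and the sample steam-flow values are hypothetical.

```python
from statistics import mean

def absolute_change(good, bad):
    """Return (shift, percent shift) of the bad-period mean vs. the good-period baseline."""
    baseline = mean(good)
    shift = mean(bad) - baseline
    # Percent shift is undefined when the baseline average is zero.
    pct = 100.0 * shift / baseline if baseline != 0 else float("nan")
    return shift, pct

# Hypothetical steam-flow readings from a good and a bad period.
good_flow = [50.0, 51.0, 49.0, 50.0]
bad_flow = [55.0, 54.0, 56.0, 55.0]
shift, pct = absolute_change(good_flow, bad_flow)
print(f"shift = {shift:+.1f} ({pct:+.1f}% of baseline)")  # shift = +5.0 (+10.0% of baseline)
```

Reporting the shift as a percentage of the baseline makes it easier to compare tags that are measured in different units.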

Variability

Here we want to know if the variability has changed in some way. In this case, we’ll show the change in COV (coefficient of variation) between a good period and a bad period. COV is the standard deviation divided by the mean, so it normalizes the variation to the size of the value. Because of that normalization, high-valued tags don’t automatically show more variation than low-valued tags.

Variability charts are commonly used to identify less consistent operating conditions and perhaps more variations in quality, energy usage, etc.
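A minimal sketch of the COV comparison, assuming each period’s readings for a tag are a plain list of numbers (the helper names and sample values are made up for illustration). Keep in mind that COV is undefined when the mean is zero and hard to interpret when the mean is near zero or negative.

```python
from statistics import mean, stdev

def cov(values):
    """Coefficient of variation: sample standard deviation divided by the mean.

    Undefined for a zero mean and hard to interpret near zero or for negative means.
    """
    return stdev(values) / mean(values)

def cov_change(good, bad):
    """Change in COV from the good period to the bad period (positive = more variable)."""
    return cov(bad) - cov(good)

# Hypothetical readings: same average in both periods, bigger swings in the bad one.
good_temp = [100.0, 102.0, 98.0, 100.0]
bad_temp = [100.0, 110.0, 90.0, 100.0]
print(f"COV good={cov(good_temp):.4f} bad={cov(bad_temp):.4f} "
      f"change={cov_change(good_temp, bad_temp):+.4f}")

# Normalization: a tag ten times larger with proportionally larger swings has the same COV.
print(abs(cov([1000.0, 1020.0, 980.0, 1000.0]) - cov(good_temp)) < 1e-12)  # True
```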

Standard Deviations

Anyone who’s done control charts in the past 30 years will be familiar with standard deviations. Here we take a period of data, get the average, calculate the standard deviation, and set limits around the average (+/- 3 standard deviations is pretty typical). Then we flag any points that fall outside those limits.

Standard deviation is probably the most common way to gauge how well the process is being controlled, and it’s used to define the operating limits.
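The control-limit approach can be sketched in a few lines. This is a minimal illustration, assuming a baseline list of readings from a good period and the typical k = 3; the function names and values are hypothetical.

```python
from statistics import mean, stdev

def control_limits(baseline, k=3.0):
    """Return (lower, center, upper) limits at +/- k sample standard deviations."""
    center = mean(baseline)
    sigma = stdev(baseline)
    return center - k * sigma, center, center + k * sigma

def out_of_limits(values, limits):
    """Indices of values that fall outside the control limits."""
    lower, _, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]

# Hypothetical baseline readings and new data to evaluate.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0]
limits = control_limits(baseline)
new_data = [10.1, 9.9, 11.5, 10.0, 8.2]
print(out_of_limits(new_data, limits))  # [2, 4]
```

Classic Shewhart individuals charts often estimate sigma from the average moving range rather than the sample standard deviation; this sketch follows the simpler description above.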

Multi-Parameter

This is a more advanced method of deviation detection that we at dataPARC refer to as PCA Modelling (PCA stands for principal component analysis). Here we take all the variables and model them against each other to narrow the acceptable range. Instead of flat ranges, the resulting limits are often rate-dependent.

The benefit of PCA Modelling over the other anomaly detection methods is that it narrows the window, giving us an operating range that is specific to the current rate and other operating conditions.
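dataPARC’s PCA Modelling implementation isn’t described here, so the following is only a simplified stand-in that shows the idea: a two-tag PCA fitted on hypothetical good-period production rate and steam flow data. It standardizes both tags, finds the principal direction, and flags points that sit too far off that direction (a squared-prediction-error style residual check). A real PCA monitoring setup would typically handle many tags, choose how many components to retain, and also check Hotelling’s T² for points that move along the principal direction beyond the training range.

```python
import math
from statistics import mean, stdev

def fit_two_tag_pca(x, y):
    """Fit a two-variable PCA on good-period data (e.g. rate and steam flow)."""
    mx, my, sx, sy = mean(x), mean(y), stdev(x), stdev(y)
    zx = [(v - mx) / sx for v in x]  # standardize so units don't dominate
    zy = [(v - my) / sy for v in y]
    n = len(zx)
    # Sample covariance matrix [[a, b], [b, c]] of the standardized data.
    a = sum(u * u for u in zx) / (n - 1)
    c = sum(v * v for v in zy) / (n - 1)
    b = sum(u * v for u, v in zip(zx, zy)) / (n - 1)
    # Closed-form eigenvalues of a symmetric 2x2 matrix (lam1 >= lam2).
    half_trace = (a + c) / 2
    disc = math.sqrt(((a - c) / 2) ** 2 + b * b)
    lam1, lam2 = half_trace + disc, half_trace - disc
    # Eigenvector for lam1 is the principal direction; v2 is perpendicular to it.
    if abs(b) > 1e-12:
        v1 = (lam1 - c, b)
    else:
        v1 = (1.0, 0.0) if a >= c else (0.0, 1.0)
    length = math.hypot(v1[0], v1[1])
    v1 = (v1[0] / length, v1[1] / length)
    v2 = (-v1[1], v1[0])
    return {"mx": mx, "my": my, "sx": sx, "sy": sy, "v2": v2, "lam2": lam2}

def residual_scores(model, x, y):
    """Signed distance of each standardized point from the principal direction."""
    vx, vy = model["v2"]
    return [((xi - model["mx"]) / model["sx"]) * vx + ((yi - model["my"]) / model["sy"]) * vy
            for xi, yi in zip(x, y)]

def flag_anomalies(model, x, y, k=3.0):
    """Indices whose residual exceeds k standard deviations of the good-period residual."""
    limit = k * math.sqrt(max(model["lam2"], 1e-12))  # floor guards a perfect fit
    return [i for i, r in enumerate(residual_scores(model, x, y)) if abs(r) > limit]

# Hypothetical good period: steam flow tracks production rate.
rate = [80, 85, 90, 95, 100, 105, 110, 115, 120]
steam = [40.2, 42.4, 45.1, 47.3, 50.2, 52.4, 55.1, 57.6, 59.9]
model = fit_two_tag_pca(rate, steam)

# 57 is inside the good-period steam range, but too high for a rate of 100.
print(flag_anomalies(model, [100, 100, 120], [50.0, 57.0, 60.0]))  # [1]
```

The point at rate 100 and steam 57 is flagged even though 57 falls within the good-period steam range of roughly 40 to 60; it is simply too much steam for that rate. A flat limit on steam alone would miss it, which is the rate-dependent behavior described above.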

Setting up Anomaly Detection

Now that we have a basic understanding of some methods for detecting anomalies in our manufacturing process, we can begin setting up our detection system. The steps below outline the process we usually follow when setting up anomaly detection for our customers, and we typically advise them to take a similar approach when doing it themselves.

1. Select Your Tags

Simple enough. For any particular process area, you’re going to have at least a handful of tags you’ll want to review to see if you can spot the problem. Find them and, using your favorite time series trending application (if you have one) or Excel (if you don’t), gather a fairly large data set, maybe a month or so.
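If you export your tags from a trending tool or Excel as CSV, a small loader can get them into Python for analysis. This sketch assumes a layout with a `timestamp` column in ISO 8601 format followed by one numeric column per tag; the tag names are hypothetical, and real historian exports vary.

```python
import csv
import io
from datetime import datetime

def load_tag_export(fileobj):
    """Read CSV with a 'timestamp' column plus one column per tag.

    Returns (timestamps, {tag: [float or None]}); blank cells become None.
    """
    reader = csv.DictReader(fileobj)
    tags = [name for name in reader.fieldnames if name != "timestamp"]
    timestamps, data = [], {tag: [] for tag in tags}
    for row in reader:
        timestamps.append(datetime.fromisoformat(row["timestamp"]))
        for tag in tags:
            cell = (row[tag] or "").strip()
            data[tag].append(float(cell) if cell else None)
    return timestamps, data

# Hypothetical export with two tags and one missing value.
sample = io.StringIO(
    "timestamp,FT-101,TT-205\n"
    "2024-01-01T00:00:00,50.1,180.2\n"
    "2024-01-01T00:01:00,49.8,\n"
)
timestamps, data = load_tag_export(sample)
print(len(timestamps), data["TT-205"])  # 2 [180.2, None]
```

For a file on disk, pass `open(path, newline="")` instead of the in-memory sample, as the `csv` module recommends.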

At dataPARC, we’ve been performing time series anomaly detection for customers for years, so we actually built a deviation detection application to simplify a lot of these routine steps.

For instance, if we want, we can grab an entire process unit from a display graphic and drag it into our app without having to take the time to hunt for the individual tags themselves. Pretty cool, right?

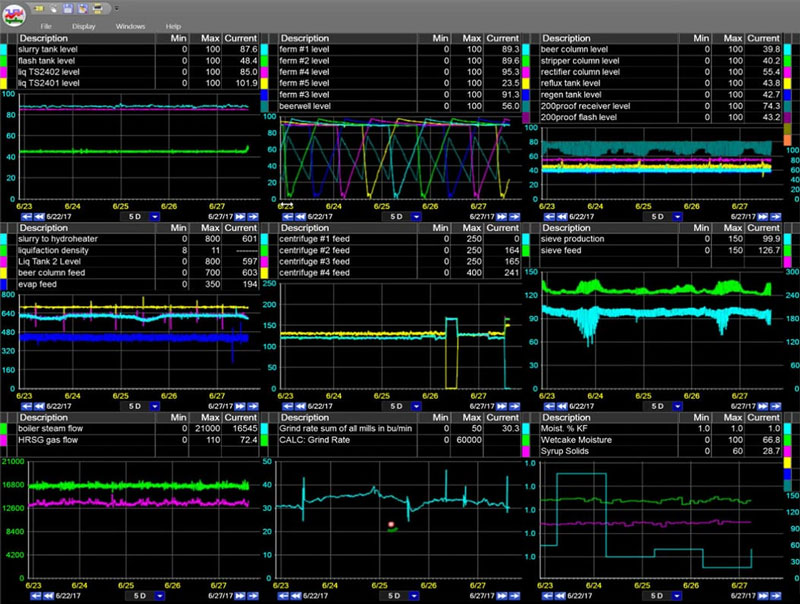

If we just pull up the process graphic for this part of the plant, we can quickly compile all the tags we want to review.

2. Filter out Downtime

This is a CRITICAL step, and it should be applied before you even identify your good and bad periods. To accurately detect anomalies in your process data, you need to filter out any downtime at your plant that would otherwise skew your numbers.

Downtime.

dataPARC’s PARCview application allows you to define thresholds that automatically identify and filter out downtime, so if you’re using a process analytics toolkit like PARCview, that’ll save you some time. If your analytics tools or historian don’t have this capability, you can filter out the downtime by hand in Excel. Regardless of how you do it, it’s a critical step.
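PARCview’s downtime configuration isn’t shown here; as a generic sketch, a simple threshold filter drops every sample where a production-rate tag is missing or below a minimum. The tag name `PROD_RATE` and the threshold of 50 are hypothetical, so pick the tag and limit that actually indicate your unit is down.

```python
def filter_downtime(timestamps, data, rate_tag, min_rate):
    """Keep only samples where rate_tag is present and at or above min_rate."""
    keep = [i for i, r in enumerate(data[rate_tag]) if r is not None and r >= min_rate]
    kept_timestamps = [timestamps[i] for i in keep]
    kept_data = {tag: [values[i] for i in keep] for tag, values in data.items()}
    return kept_timestamps, kept_data

# Hypothetical minute data: the unit trips at 08:01 and the rate tag drops out at 08:02.
timestamps = ["08:00", "08:01", "08:02", "08:03"]
data = {"PROD_RATE": [98.0, 3.5, None, 101.0], "STEAM": [49.0, 5.0, 4.0, 50.5]}
kept_ts, kept = filter_downtime(timestamps, data, "PROD_RATE", min_rate=50.0)
print(kept_ts, kept["STEAM"])  # ['08:00', '08:03'] [49.0, 50.5]
```

Every tag is filtered with the same index list, so the remaining samples stay aligned across tags.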


3. Identify Good Period

Now you’re going to want to review your data. Look back over the month or so of data you pulled and identify a period when everyone agrees the process was running “good”. This could be a week, two weeks… whatever makes sense for your process.

Things are running well here.

4. Identify Bad Period

Now that we have our baseline, we need to find our “bad” period. Either we wait for a bad period to occur, or we proactively look for bad periods as time goes on.

Here we’re having some trouble.
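Once the good and bad windows are agreed on, pulling them out of the data set is just a time-range filter. A minimal sketch, assuming timestamps are `datetime` objects and the data is a dict mapping tag names to value lists (both assumptions for illustration; the dates and tag name are hypothetical):

```python
from datetime import datetime, timedelta

def slice_period(timestamps, data, start, end):
    """Return {tag: values} for samples with start <= timestamp < end."""
    idx = [i for i, t in enumerate(timestamps) if start <= t < end]
    return {tag: [values[i] for i in idx] for tag, values in data.items()}

# Hypothetical daily averages for January 2024.
timestamps = [datetime(2024, 1, 1) + timedelta(days=d) for d in range(31)]
data = {"FT-101": [50.0 + 0.1 * d for d in range(31)]}

good = slice_period(timestamps, data, datetime(2024, 1, 1), datetime(2024, 1, 8))
bad = slice_period(timestamps, data, datetime(2024, 1, 20), datetime(2024, 1, 27))
print(len(good["FT-101"]), len(bad["FT-101"]))  # 7 7
```

Using a half-open window (start included, end excluded) keeps back-to-back periods from sharing a sample.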

5. Analyze the Data

Yes, it’s important to understand the different anomaly detection methods, and yes, we’ve discussed the steps needed to build our very own time series anomaly detection system, but perhaps the most critical part of this whole process is analyzing the data once we’ve become aware of the deviations. This is how we pinpoint which tags (which part of our process) are giving us problems.
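One simple way to turn these comparisons into a shortlist is to score every tag and sort. The sketch below scores each tag by how far its bad-period mean moved, measured in good-period standard deviations, and returns the top five. This scoring rule is an illustrative assumption, not dataPARC’s Deviation Analysis method; in practice you might also weigh COV changes or multivariate (PCA) contributions. Tag names and values are hypothetical.

```python
from statistics import mean, stdev

def rank_tags(good, bad, top_n=5):
    """Rank tags by |bad mean - good mean| / good-period standard deviation."""
    scores = {}
    for tag, good_vals in good.items():
        sigma = stdev(good_vals)
        if sigma == 0:
            continue  # flat baseline: skip rather than divide by zero
        scores[tag] = abs(mean(bad[tag]) - mean(good_vals)) / sigma
    # Highest score first, keeping only the top_n candidates.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_n]

# Hypothetical good/bad period data for three tags.
good = {
    "FT-101": [50, 51, 49, 50],
    "TT-205": [180, 182, 178, 180],
    "PT-330": [5.0, 5.1, 4.9, 5.0],
}
bad = {
    "FT-101": [50, 50, 51, 49],
    "TT-205": [186, 188, 185, 187],
    "PT-330": [5.2, 5.1, 5.3, 5.2],
}
print([tag for tag, _ in rank_tags(good, bad)])  # ['TT-205', 'PT-330', 'FT-101']
```

Scaling each shift by the tag’s own good-period spread lets a small change on a tightly controlled tag outrank a larger change on a naturally noisy one.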

Deviation Analysis is a pretty big topic that we’ve covered extensively in another post.

Looking Ahead

Anomaly detection systems are great for quickly identifying key process changes, and the system should really be available to people at nearly every level of your operation. For effective troubleshooting and analysis, everyone from operators and process engineers to maintenance and management needs visibility into this data and the ability to provide input.

With a properly configured system, you should be able to narrow down roughly what your problem is, to within 5 tags, in 5 minutes.

So, when management asks “what’s changed, and how do we fix it?”, just tell them to give you 5 minutes.