In this blog, we explore the differences between historians and SCADA, and why the conversation shouldn’t be about choosing one over the other. Instead, we’ll break down how these technologies work together to create a comprehensive operational data ecosystem, empowering teams to monitor processes in real time and analyze historical data for long-term improvement.

Fast, scalable data historian at a fraction of the cost. Check out the dataPARC Historian.

The Shift from Control to Insight

Traditional SCADA systems are built for one primary purpose: supervisory control and real-time monitoring. They aggregate process data from PLCs, RTUs, and DCS systems, visualize key variables, and enable operators to maintain stable production conditions. SCADA excels at near-instant response to alarms, setpoint changes, and interlocks, ensuring safety and reliability at the equipment level.

As manufacturing evolves toward data-driven operations, the expectations of plant information systems have expanded. Engineers need not only to see what is happening now, but also to understand how and why it happened, and to predict what will happen next. This requires high-resolution, long-term process data that can be analyzed across shifts, batches, or production campaigns to uncover patterns and correlations that short-term SCADA data cannot provide.

SCADA’s typical data retention spans hours to days. Many legacy systems overwrite data after 48-72 hours or downsample to 1-minute averages, losing the high-frequency signal detail that is critical for identifying transient upsets or oscillatory behavior. This limited data history is insufficient for advanced analytics, root cause investigations, or machine learning models that rely on continuous, unbroken time-series data. Additionally, the data is often accessible only to a limited number of clients in the control room, which further restricts analytics and a site’s ability to use its own data.
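To make the downsampling problem concrete, here is a minimal sketch in Python. The signal is invented for illustration (a 1 Hz pressure tag with a three-second spike), and `downsample_mean` is a hypothetical helper, not part of any SCADA or historian product.

```python
from statistics import mean

def downsample_mean(samples, window):
    """Average consecutive, non-overlapping windows of `window` samples."""
    return [mean(samples[i:i + window]) for i in range(0, len(samples), window)]

# Hypothetical 1 Hz pressure signal covering two minutes:
# steady at 50.0, with a 3-second transient to 80.0 in the first minute.
raw = [50.0] * 120
raw[30:33] = [80.0, 80.0, 80.0]

# One value per minute, as a system storing 1-minute averages would keep.
averaged = downsample_mean(raw, window=60)

print("raw peak:", max(raw))            # 80.0
print("1-minute averages:", averaged)   # [51.5, 50.0]
```

The 80.0 peak is visible in the raw data, but the stored 1-minute average for that window is 51.5, which looks like ordinary noise. Anyone investigating the upset later would have no evidence it happened.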

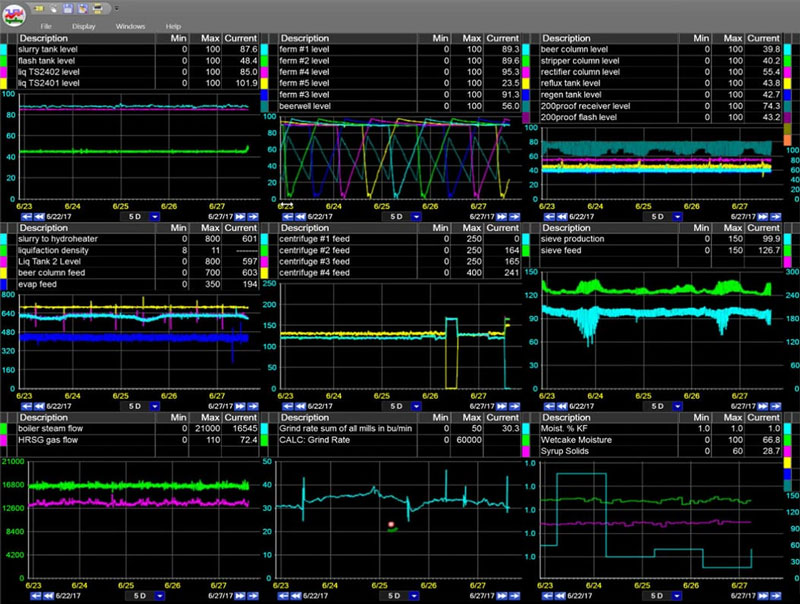

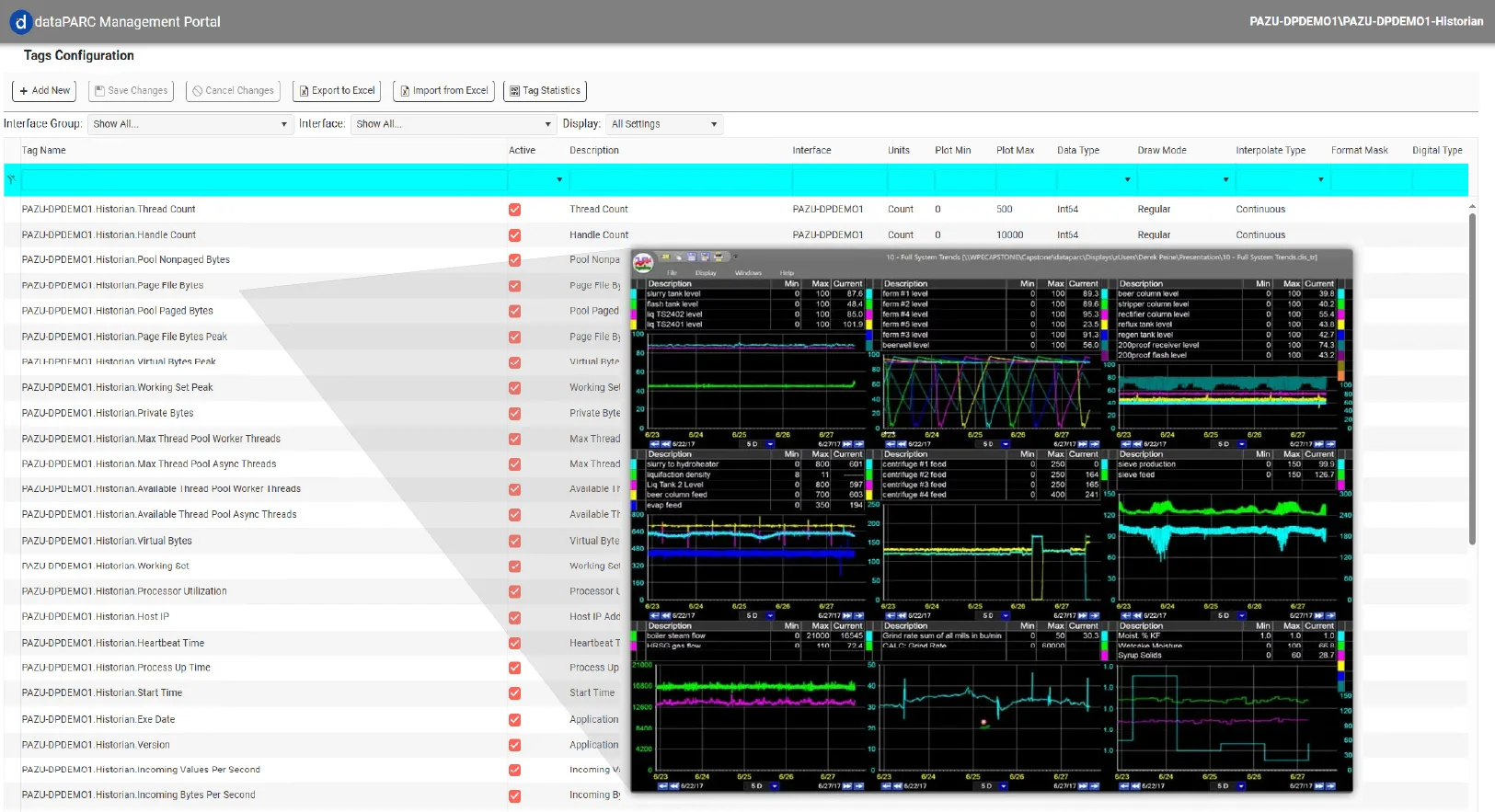

A process historian, such as dataPARC Historian, is engineered specifically to fill that gap. It continuously captures time-series data at full resolution and stores it efficiently with no end date, preserving context and granularity. This gives engineers the ability to trend years of process behavior, correlate production data with quality metrics, and analyze energy or throughput efficiency over time.

By extending the focus from real-time control to long-term insight, a historian transforms data from an operational necessity into a strategic asset. It becomes the foundation for continuous improvement, predictive analytics, and smarter manufacturing decisions.

What Makes a Time Series Historian Different

While SCADA systems are designed for real-time monitoring and control, process historians are architected for long-term data collection, high-frequency storage, and advanced analysis. The distinction is not only in data retention, but in data integrity, accessibility, and context.

Historians capture and preserve high-resolution time-series data from hundreds or thousands of process tags across multiple systems. Rather than downsampling or overwriting old values, a historian continuously appends data, maintaining both raw and aggregated datasets. This approach allows engineers to analyze process conditions at any point in time without losing granularity.

Where SCADA provides operator control and alarms, the historian captures complete time-series data behind every trend, event, and decision, enabling deep analysis and performance improvement.

Unlike SCADA, which is primarily operator-facing, historians are used by a wide range of plant roles. Process engineers trend and compare conditions across batches or product grades. Reliability teams correlate downtime data with vibration or temperature profiles. Quality teams overlay lab results on process variables. Managers use the same underlying data for KPI dashboards and performance reviews.

In the context of digital transformation, the historian serves as the core data infrastructure. It provides the foundation for advanced applications such as statistical process control (SPC), predictive quality, and model-based optimization, as well as machine learning models for anomaly detection, soft sensors for real-time quality prediction, and reinforcement learning algorithms for advanced process control. By ensuring all users access the same validated, time-aligned data, the historian supports consistent decision-making from the control room to corporate management.

With the dataPARC Historian, process data is preserved at its original fidelity, easily retrievable, and contextually enriched. Engineers gain the visibility needed to detect process drift, evaluate optimization projects, and quantify improvements with confidence.

Use Case Comparison: Historians vs. SCADA in Action

SCADA and historian systems often work together, but their roles in process analysis differ fundamentally. SCADA provides short-term situational awareness, while a historian provides long-term analytical capability. The distinction becomes clear when engineers attempt to identify performance trends, evaluate root causes, or validate process improvements over time.

Below are several common industrial use cases that highlight how each system performs in practice.

Downtime Analysis

With SCADA: Operators can observe when a piece of equipment trips or an alarm occurs, but historical data is often limited to the current or previous shift. Event context, such as upstream and downstream process conditions or operator actions, is typically missing. Without that history, it becomes difficult to differentiate between mechanical, process, or control-related failures.

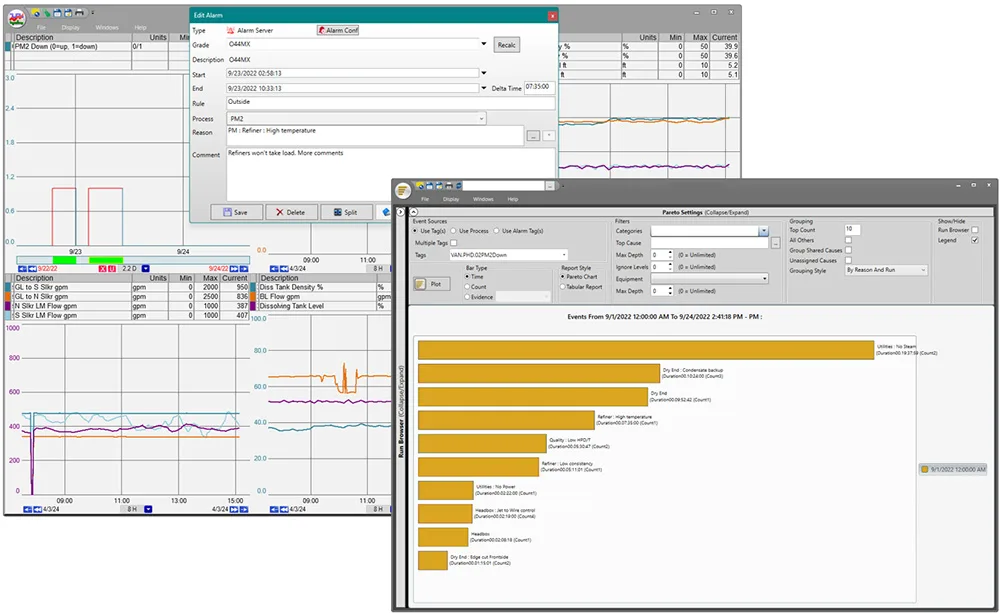

With the dataPARC Historian: Downtime events are automatically logged with precise timestamps and can be correlated with process data, alarms, and operator notes. Engineers can filter by equipment, product grade, or time period to detect recurring issues and determine whether failures are driven by process variability, equipment wear, or human intervention. Historical resolution allows for frequency analysis, Pareto ranking, and correlation with production rate or ambient conditions. This can be done on the plant floor or in an office; the data is more versatile and accessible with the dataPARC Historian.

With historical data, users can review past downtime events in a Pareto chart to identify the most common causes and reduce future occurrences.
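As a rough illustration of the Pareto ranking described above, the sketch below counts downtime events by cause and adds a cumulative percentage. The event list and cause names are made up, and `pareto` is a hypothetical helper. In practice the events would come from the historian’s downtime log rather than a hard-coded list.

```python
from collections import Counter

def pareto(causes):
    """Return (cause, count, cumulative_percent) rows, most frequent first."""
    counts = Counter(causes)
    total = sum(counts.values())
    rows, running = [], 0
    for cause, count in counts.most_common():
        running += count
        rows.append((cause, count, round(100 * running / total, 1)))
    return rows

# Hypothetical downtime log: one entry per event, labeled with its cause.
events = ["Sheet break"] * 5 + ["Drive fault"] * 3 + ["Power dip"] * 2

for cause, count, cumulative in pareto(events):
    print(f"{cause:<12} {count:>2}  {cumulative:>5}%")
```

With this sample data, sheet breaks account for half of all events and the top two causes cover 80%, which is the kind of ranking that tells a team where to focus first.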

Quality Troubleshooting

With SCADA: Operators can view real-time values such as temperature, pressure, or flow, but comparing performance across product runs or shifts requires manual exports and spreadsheet analysis. SCADA trends often lack synchronized lab data or product identifiers, making it difficult to isolate when a deviation began or which batch was affected.

With the dataPARC Historian: Quality and process data can be trended together at high resolution. Users can overlay laboratory results, product codes, and process setpoints to determine exactly when deviations occurred and under what conditions. By comparing good and bad batches, engineers can apply multivariate analysis (PCA, PLS) to identify which process variables, or combinations thereof, correlate most strongly with quality deviations, enabling statistical process control and feed-forward corrections.
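Overlaying lab results on process data comes down to matching each lab sample with the process value that was in effect when the sample was taken. The sketch below shows one common way to do that, a "last known value at or before the sample time" lookup. The tag values, lab results, and the `value_at` helper are all hypothetical, and this is not a description of how dataPARC performs the alignment internally.

```python
from bisect import bisect_right

def value_at(timestamps, values, t):
    """Return the most recent value recorded at or before time t, or None."""
    i = bisect_right(timestamps, t)
    return values[i - 1] if i else None

# Hypothetical process tag: seconds since start of run, and temperature.
temp_times = [0, 60, 120, 180, 240]
temp_values = [180.0, 181.5, 186.0, 190.5, 182.0]

# Hypothetical lab results: (sample time in seconds, measured brightness).
lab_samples = [(130, 84.2), (250, 86.9)]

# Pair each lab result with the temperature in effect at sample time.
aligned = [(t, result, value_at(temp_times, temp_values, t))
           for t, result in lab_samples]

for t, result, temp in aligned:
    print(f"t={t}s  lab={result}  temperature={temp}")
```

The lookup assumes the timestamps are sorted and that both sources share a clock. When they do not, as with manual spreadsheet exports, the matching becomes unreliable.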

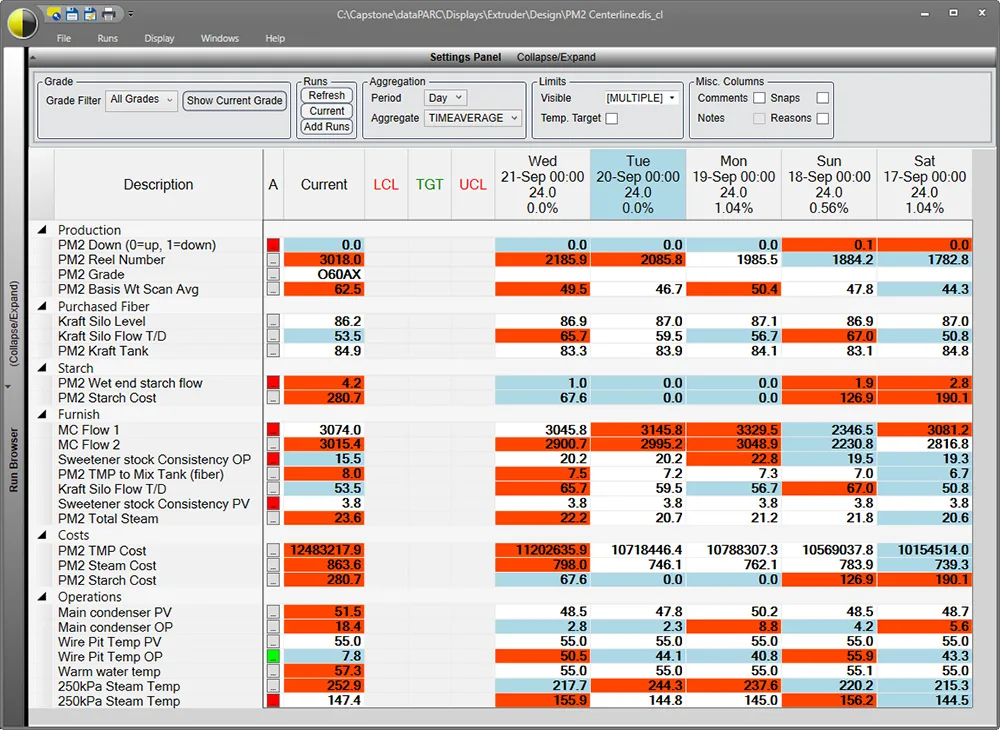

A PARCview display called a Centerline can be used for quality control and to compare historical data from runs or batches. It highlights values that are running higher or lower than average, pointing to the variables worth investigating.
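The idea behind this kind of display can be sketched in a few lines: compare each variable’s current value against its average over past runs and flag the ones that sit unusually far from it. The example below is only an approximation of the concept, not PARCview’s implementation. The tag names, values, and the two-standard-deviation threshold are assumptions chosen for illustration.

```python
from statistics import mean, stdev

def flag_deviations(history, current, n_sigma=2.0):
    """Flag tags whose current value is more than n_sigma sample standard
    deviations away from the mean of their historical run averages."""
    flags = {}
    for tag, past_values in history.items():
        center, spread = mean(past_values), stdev(past_values)
        if spread == 0:
            continue  # no historical variation, so no basis for comparison
        z = (current[tag] - center) / spread
        if abs(z) > n_sigma:
            flags[tag] = "high" if z > 0 else "low"
    return flags

# Hypothetical run averages from five earlier runs of the same grade.
history = {
    "steam_flow": [10.0, 10.2, 9.8, 10.1, 9.9],
    "headbox_ph": [7.0, 7.1, 6.9, 7.0, 7.0],
    "machine_speed": [900, 905, 895, 902, 898],
}
# Hypothetical averages for the run in progress.
current = {"steam_flow": 11.0, "headbox_ph": 7.02, "machine_speed": 880}

print(flag_deviations(history, current))
```

With this data, steam flow is flagged as high and machine speed as low, while pH stays within its normal band, so an engineer would start with the first two.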

Production Cost and Energy Efficiency

With SCADA: Energy or material usage is typically monitored in real time, but cost calculations are performed later in separate business systems. There is limited visibility into cost per unit produced, and operators have no immediate feedback for process optimization.

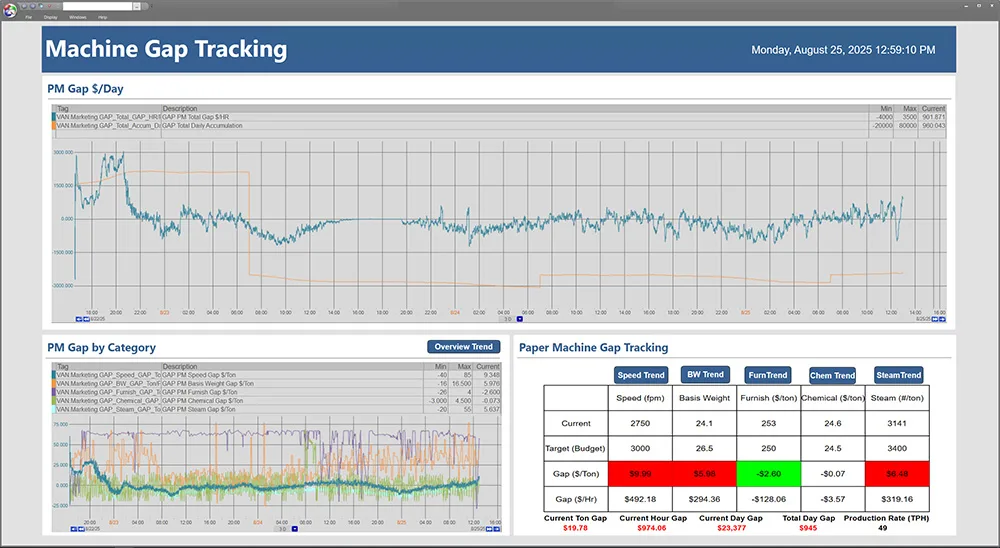

With the dataPARC Historian: Energy and utility consumption can be combined with process throughput and material usage to calculate real-time cost per unit. Historical analysis enables correlation between operating conditions, equipment performance, and energy intensity. This helps engineers identify the most efficient operating zones, quantify improvement projects, and justify process changes with actual cost data.

Having access to historical data can enable calculations such as gap tracking to see how the process is performing now compared to past production.
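A simple version of the cost-per-unit and gap calculations might look like the sketch below. The formula, prices, and quantities are illustrative assumptions. A real calculation would pull consumption and throughput from historian tags and would likely include more cost components.

```python
def cost_per_unit(energy_kwh, energy_price, material_cost, units_produced):
    """Combined energy and material cost divided by units produced."""
    if units_produced <= 0:
        raise ValueError("units_produced must be positive")
    return (energy_kwh * energy_price + material_cost) / units_produced

# Hypothetical baseline period taken from historical data.
baseline = cost_per_unit(energy_kwh=12_000, energy_price=0.08,
                         material_cost=4_000, units_produced=100)

# Hypothetical current period at the same production volume.
current = cost_per_unit(energy_kwh=13_500, energy_price=0.08,
                        material_cost=4_100, units_produced=100)

# Gap: how far current performance sits from the historical baseline.
gap = current - baseline

print(f"baseline: {baseline:.2f} per unit")
print(f"current:  {current:.2f} per unit")
print(f"gap:      {gap:+.2f} per unit")
```

Here the current period costs about 2.20 more per unit than the baseline. Without the historical figure there would be nothing to compare against, which is the point of keeping the data.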

Shift-to-Shift Communication

With SCADA: Shift handoffs rely heavily on operator notes or verbal communication. Data visibility is limited to what is currently displayed on the HMI, and trend history often resets each day. Important context from prior shifts is easily lost, leading to inconsistent troubleshooting and repeated investigations.

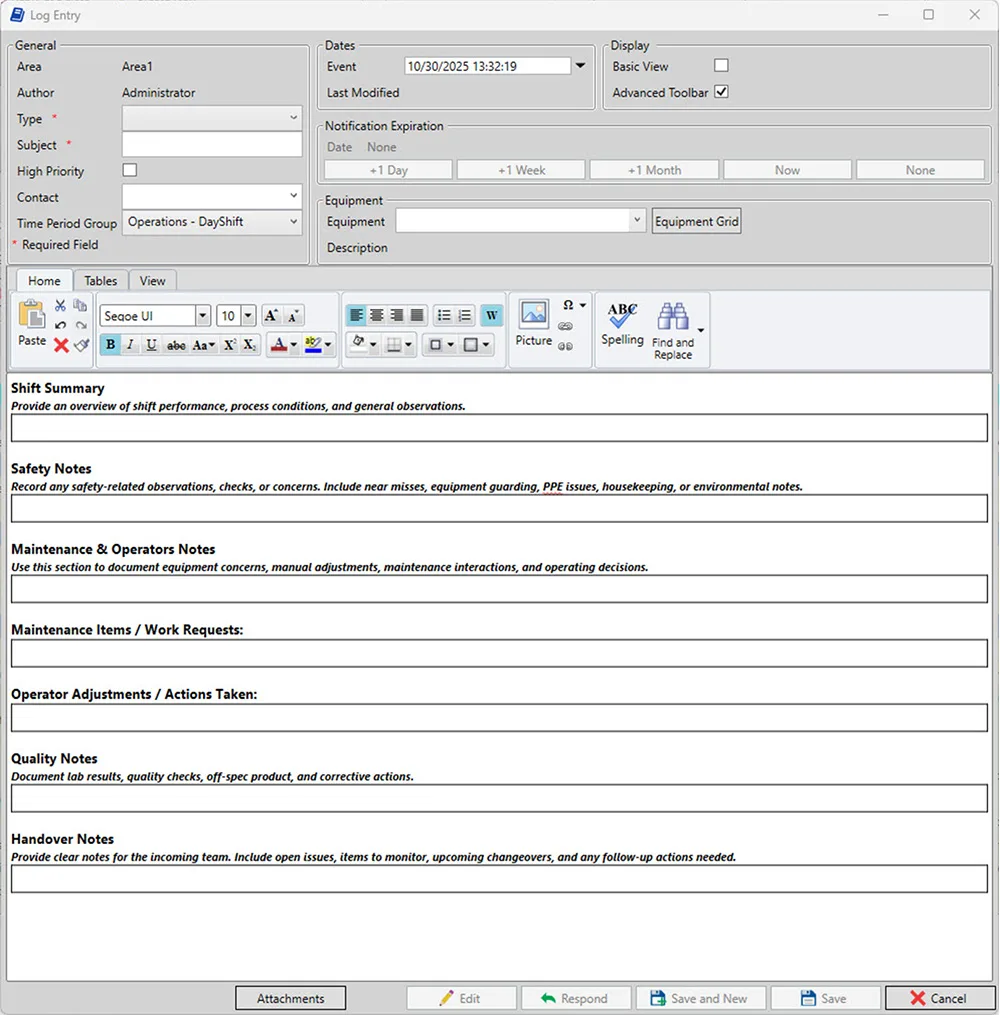

With the dataPARC Historian: All users have access to the same historical record of trends, alarms, and operator comments through integrated tools like Logbook. Engineers can review prior shift activity, confirm corrective actions, and analyze recurring problems using actual process data. This consistency reduces downtime and improves alignment between operations and engineering teams.

While Logbook is a feature of PARCview rather than the dataPARC Historian, it still illustrates the importance of historical data and having access to past information.

These examples illustrate a core principle: SCADA systems are designed for control, while historians are designed for understanding. A historian such as the dataPARC Historian allows teams to move beyond reactionary decision-making and into continuous improvement, where every data point contributes to process optimization and reliability.

Why Smart Teams Move Beyond SCADA for Analytics

As process industries evolve toward data-centric decision-making, engineers increasingly recognize that SCADA alone cannot meet the analytical and diagnostic requirements of modern manufacturing. SCADA systems are indispensable for control and safety, but their architectures were never designed to handle the volume, velocity, and variety of data generated by today’s plants.

Many plants attempt to use SCADA as a makeshift historian by exporting data to spreadsheets or external databases, but this approach introduces its own issues. Time synchronization between sources becomes unreliable, metadata is lost, and manual manipulation can easily introduce errors. The lack of contextual information, such as product grade, equipment state, or lab results, makes long-term analysis cumbersome and inconsistent.

A dedicated historian solves these problems by providing high-resolution, contextualized time-series data designed for deep analysis. It provides the foundation for advanced troubleshooting, model development and validation, and continuous improvement efforts across the plant. If your facility is looking to elevate its data strategy, deploying a historian is a critical first step, especially as AI and third-party analytics tools increasingly rely on rich historical data to drive results.

Moving beyond SCADA also supports broader analytics initiatives, such as:

- Predictive maintenance, using long-term vibration, temperature, and runtime data to forecast equipment failures.

- Energy and resource optimization, correlating utility usage with production rate and ambient conditions.

- Process capability and variability analysis, leveraging SPC techniques to quantify stability over time.

- Production traceability, linking process data to batch records, lab results, and final product quality.
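
As one example from the list above, process capability is often summarized with the Cpk index: the distance from the process mean to the nearer specification limit, divided by three standard deviations. The sketch below uses the overall sample standard deviation (some SPC conventions use a within-subgroup estimate instead). The sample values and spec limits are invented for illustration.

```python
from statistics import mean, stdev

def cpk(samples, lsl, usl):
    """Process capability index: distance from the mean to the nearer
    spec limit, in units of three sample standard deviations."""
    mu, sigma = mean(samples), stdev(samples)
    if sigma == 0:
        raise ValueError("samples show no variation; Cpk is undefined")
    return min(usl - mu, mu - lsl) / (3 * sigma)

# Hypothetical measurements of a quality variable pulled from history.
samples = [10.0, 10.2, 9.8, 10.1, 9.9]

centered = cpk(samples, lsl=9.0, usl=11.0)    # mean sits midway between limits
off_center = cpk(samples, lsl=9.5, usl=11.0)  # mean sits closer to the lower limit

print(f"Cpk, centered limits:   {centered:.2f}")
print(f"Cpk, off-center limits: {off_center:.2f}")
```

The same data scores lower when the mean sits closer to one limit, which is why Cpk is useful for spotting drift. Five samples are far too few for a real capability study. Long-term, high-resolution history is what makes the estimate meaningful.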

By adopting a historian-driven architecture, plants can transition from event response to data-driven optimization. Instead of relying solely on operator intuition, engineers have access to validated, time-aligned data that supports quantitative analysis. This accelerates root cause investigations, shortens troubleshooting cycles, and provides the empirical foundation for continuous improvement and AI-driven initiatives.

In short, SCADA keeps the process running safely, but a historian ensures that it runs efficiently and intelligently.

How dataPARC Bridges the Gap

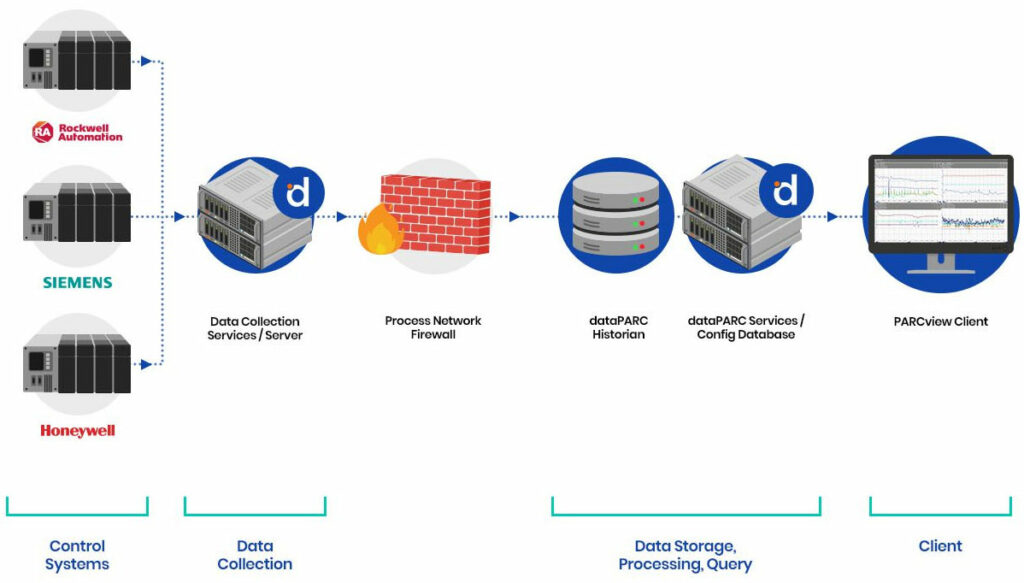

In most manufacturing environments, SCADA systems are the backbone of real-time control, while historians serve as the foundation for long-term analysis. However, the real value emerges when these two systems operate seamlessly together. dataPARC Historian is designed to bridge that gap by combining high-speed data collection with scalable, contextualized analytics that span both operational and engineering domains.

The dataPARC Historian supports standard industrial protocols such as OPC DA, OPC UA, and gRPC, allowing engineers to integrate information from process automation, laboratory, and enterprise systems without extensive custom interfaces. This integration ensures that process variables, lab data, and production metrics are all synchronized in time and stored with consistent metadata.

The result is a centralized data layer that supports multiple use cases:

- Operations benefit from real-time dashboards and alarm summaries.

- Process engineers access detailed time-series data for analysis, model validation, and troubleshooting.

- Quality teams correlate product data with process conditions.

- Management gains visibility into KPIs, production efficiency, and cost per unit.

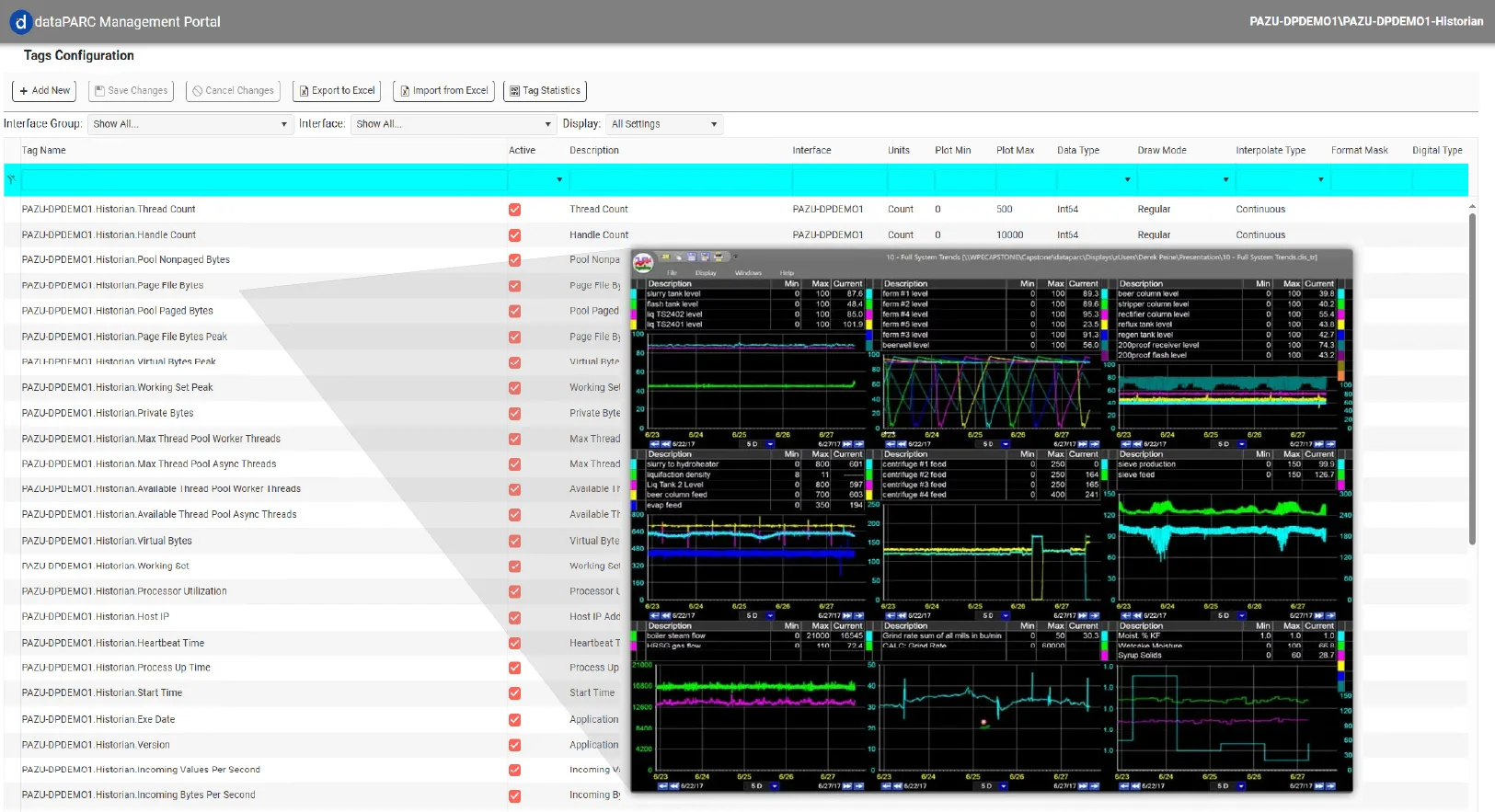

dataPARC is not only a data repository but also a contextual analysis platform. Engineers can apply product-specific limits, aggregate by batch or shift, and trend multiple variables across timeframes without exporting data to spreadsheets.

Working together, PARCview and the dataPARC Historian enable predictive quality monitoring, early warning alerts, and deviation analysis without the need for additional layers of data transformation. The built-in Logbook feature provides a structured way to capture operator and engineer comments, aligning human insight with process data for a more complete operational record.

Unlike many historians and visualization systems that require specialized IT support for configuration, dataPARC is designed to be engineer-accessible. Users can configure tags, build dashboards, and analyze data through an intuitive interface that mirrors the way engineers think about process relationships. This accessibility ensures faster adoption and more consistent use across departments.

In practice, dataPARC becomes the bridge between control and insight. It connects the immediacy of SCADA with the analytical depth of a historian, empowering teams to not only see what is happening but to understand why. By combining real-time visibility with historical context, manufacturers can optimize process performance, improve product quality, and enable smarter, data-driven operations.

Choose Tools That Scale with You

The evolution from SCADA-based control to historian-driven insight represents a shift in how process data is valued and used. SCADA systems remain essential for real-time control and operator safety, but they were never designed to store, contextualize, or analyze data at the scale required for modern process optimization.

For process engineers, long-term visibility is critical. Without historical context, it is impossible to verify performance improvements, identify gradual process drift, or support data-driven initiatives such as model-based control and predictive analytics. A historian provides that foundation by ensuring every tag, alarm, and event is stored, time-synchronized, and accessible for analysis.

The dataPARC Historian, coupled with PARCview, expands this capability by integrating real-time visualization, contextual analytics, and cross-system connectivity within a single environment. Engineers can move seamlessly from live process monitoring to historical review, and from root cause analysis to cost and quality evaluation, all without losing data or context.

Selecting tools that scale with your operation is essential. A system that captures high-frequency data today must also support the analytics and digital transformation goals of tomorrow. With dataPARC, teams gain a platform built for both operational reliability and long-term improvement, ensuring that process data becomes not just a record of what happened, but a resource for continual learning and optimization.

FAQs: Historians vs. SCADA

- Why is long-term historical data important for process optimization?

Long-term data allows engineers to detect slow process drift, identify recurring downtime events, and verify the impact of process changes. Without it, continuous improvement becomes guesswork. High-resolution historical data supports statistical process control, predictive quality analysis, and model-based decision-making, essential components of advanced manufacturing strategies.

- How does a historian integrate with SCADA and other plant systems?

A historian connects to SCADA, DCS, and PLC systems through standard industrial protocols such as OPC DA, OPC UA, and OPC HDA, among others. Visualization tools like PARCview can then interface with the historian, laboratory information systems, ERP databases, and maintenance systems. This integration allows engineers to align production data with quality, cost, and asset information for a complete process view.

- What types of analysis are enabled by dataPARC Historian that are not possible in SCADA?

With a full historian, teams open the door to different types of analysis, either with PARCview or third-party integrations. Sites can perform multi-variable correlation, energy intensity analysis, and predictive model validation. They can also create dashboards that combine process, lab, and business data in one view. These capabilities transform data from a monitoring tool into a foundation for continuous improvement, reliability analysis, and strategic decision-making.

- What are the performance benefits of a historian compared to SCADA data storage?

Historians use specialized compression, indexing, and retrieval algorithms to store large volumes of time-series data efficiently. Query times remain near real time, even for multi-year datasets. This allows engineers to trend data across long time spans without performance degradation or loss of detail.

- How does the dataPARC Historian improve collaboration between operators and engineering?

dataPARC Historian provides shared access to high-resolution data, dashboards, and contextual tools such as Logbook. Operators, engineers, and quality teams can all view the same historical record, review annotated trends, and correlate process data with comments or events. This unified visibility reduces communication gaps and ensures consistency across shifts and departments. It is your data, and everyone at your site who needs access to it should have it.
