In this blog, we explore why AI insights often remain siloed and the real cost of inaccessibility across operations, quality, engineering, and leadership. We break down the technical and organizational barriers that prevent predictions and analytics from reaching the people who need them. Finally, we discuss how making AI insights accessible transforms isolated outputs into actionable intelligence that drives faster, more confident decision-making across the enterprise.


Your company spent six figures on AI, and now the models are trained. The predictions are accurate. Insights are being generated every minute of every day.

So why is your operations team still making decisions based on gut feel?

Why are engineers troubleshooting issues that your predictive models already flagged?

Why does your quality team discover deviations hours after AI detected them?

The problem isn’t that AI doesn’t work.

The problem is that no one can access the insights.

This is the AI silo problem: a lack of AI accessibility.

Organizations invest heavily in machine learning platforms, predictive analytics, and advanced AI systems designed to generate powerful insights. But those AI insights often remain locked inside specialized tools, accessible only to the data scientists and analysts who built the models.

Meanwhile, the people who could act on those insights never see them. Operations managers miss predictive maintenance alerts that could prevent downtime. Quality engineers lack real-time access to anomaly detection results. Plant leaders are asked to trust decisions they can’t validate because the AI insights aren’t visible where work actually happens.

AI insights accessibility is what determines whether your AI investment delivers real value or becomes an expensive science project. When insights stay siloed in technical environments, disconnected from the operational systems where decisions are made, ROI quietly disappears. The gap between generating an insight and acting on it is where value is lost.

Breaking down AI silos and improving the accessibility of AI insights isn’t just a technology challenge. It’s an organizational one. When AI insights are delivered to the people closest to the process, in tools they already use, AI moves from an abstract capability to a practical advantage, enabling faster, more confident decisions at every level. If this is a problem at your site, you need an AI enabler.

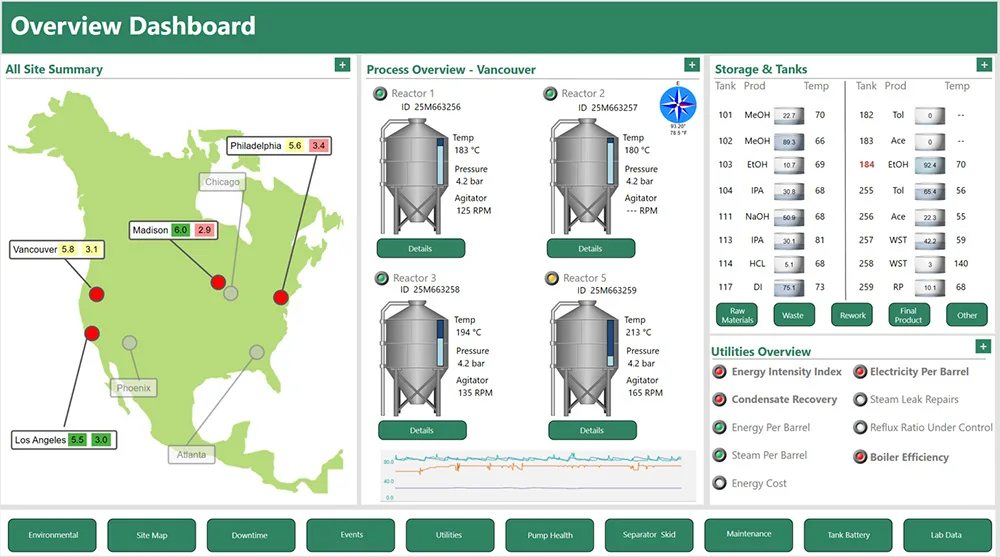

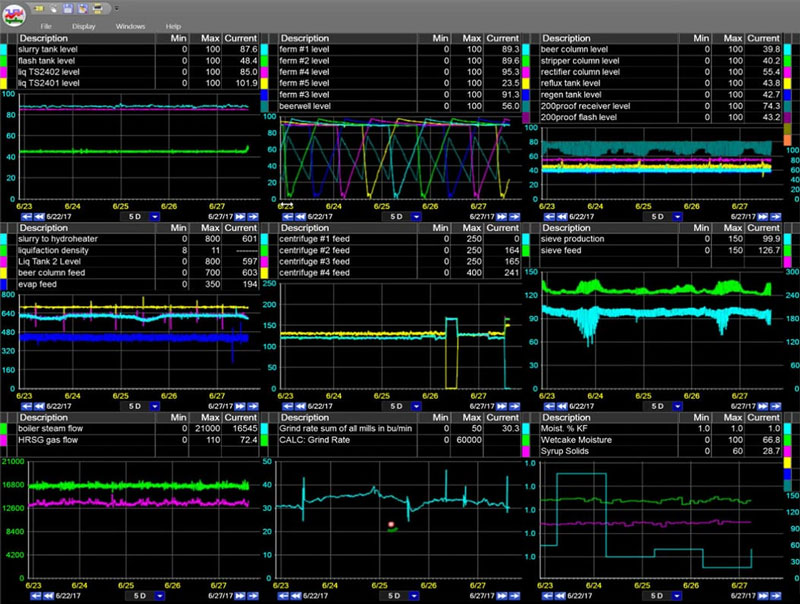

This dashboard is an example of integrated data, all in one place. The goal is to get your site’s AI insights onto an operational or overview dashboard like this one: a dashboard that already exists and is already in use.

The Hidden Cost of Inaccessible AI Insights

When AI insights aren’t accessible to the people who need them, the impact isn’t subtle. The cost shows up daily across engineering, operations, quality, and leadership. What should be a competitive advantage quietly turns into duplicated work, missed opportunities, and preventable losses.

Engineers Recreate Insights That Already Exist

Your data science team built a model that predicts product quality based on process conditions. The model works. The correlations are strong. The insight is real.

But your process engineers can’t access it because the data is in another system.

So they spend hours manually trending variables, exporting data, and recreating the same analysis. Weeks later, they reach the same conclusions the AI surfaced almost instantly. The insight existed. The value existed. But because the AI insight wasn’t accessible, the work happened twice, and the benefit arrived too late.

Operations Miss Warnings They Never See

Your predictive maintenance model detects bearing vibration patterns that indicate failure within 48 hours. The prediction is accurate. The alert fires exactly as designed.

But it lives in a data science dashboard, one that operations never checks.

Two days later, the bearing fails during a critical production run. Unplanned downtime costs tens of thousands of dollars. The AI did its job. The insight just never reached the people who could act on it.

Quality Discovers Problems After the Damage Is Done

AI-based anomaly detection identifies subtle process deviations that correlate with downstream quality issues. The signal appears early, long before defects are visible in finished product data.

But quality engineers rely on traditional SPC charts and post-run analysis. By the time the issue is discovered, off-spec material has already been produced. Rework, waste, and customer complaints follow. The early warning was there, hidden in a system the quality team couldn’t access.

With AI integration, quality can be estimated in real time during production, so the process can be adjusted before off-quality material is produced.

Leadership Decides Without the Full Picture

Strategic decisions around capacity, efficiency improvements, and capital investments should be informed by the best intelligence available. Yet when AI insights remain isolated in technical environments, leadership teams fall back on lagging indicators and summarized reports.

Executives can’t trust what they can’t see or validate themselves. Without accessible AI insights, decisions remain slower, less confident, and more reactive than they need to be.

When AI Insights Aren’t Accessible, Value Disappears

Organizations invest in AI to make better, faster decisions. Then they undermine that investment by keeping insights out of reach of decision-makers.

AI insights accessibility isn’t a nice-to-have feature. It’s the difference between AI delivering measurable value and AI becoming an expensive science project. When insights stay siloed, AI doesn’t fail technically; it fails operationally.

Why AI Insights Stay Siloed

The AI insights accessibility problem isn’t caused by neglect or bad intent. It’s the natural result of AI tools and operational systems evolving in parallel, not together. Over time, technical and organizational barriers form that prevent insights from flowing to the people who need them most.

AI Platforms Aren’t Built for Operational Use

Data scientists do their work in Azure ML, AWS SageMaker, Python notebooks, or specialized analytics platforms like Seeq. These environments are excellent for developing and validating models, but they were never designed for day-to-day operational decision-making.

AI outputs typically land in databases, APIs, or technical dashboards that operations teams don’t monitor and often can’t access. Credentials, unfamiliar interfaces, and disconnected tools keep even accurate, valuable insights out of reach of operators and engineers. Even the extra step of opening another system is often enough to keep it from being opened at all.


Technical Expertise Becomes an Access Barrier

Querying an API, parsing JSON, or navigating a Jupyter notebook isn’t part of most operators’ or process engineers’ job descriptions. Even when access technically exists, the practical friction of using unfamiliar tools is enough to stop adoption.
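For a sense of what that friction looks like, here is the kind of code that sits between a model’s API and a trend chart. This is a minimal sketch, assuming a hypothetical scoring-API response: the field names `model`, `scored_at`, and `outputs`, and the `AI.` tag prefix, are invented for illustration, and the HTTP request itself is omitted. It flattens one JSON result into the tag/timestamp/value shape that historians and trending tools work with. Ideally this step is done once, centrally, so operators never have to.

```python
import json
from datetime import datetime, timezone
from typing import NamedTuple


class TagValue(NamedTuple):
    """One historian-style point: tag name, UTC timestamp, numeric value."""
    tag: str
    timestamp: datetime
    value: float


# Hypothetical response body from a model-scoring API.
# The field names are assumptions for illustration only.
SAMPLE_RESPONSE = """
{
  "model": "bearing_health_v3",
  "scored_at": "2024-05-01T14:30:00Z",
  "outputs": {
    "failure_probability": 0.82,
    "hours_to_failure": 46.5
  }
}
"""


def to_tag_values(payload: str, tag_prefix: str = "AI") -> list[TagValue]:
    """Flatten one JSON scoring result into tag/timestamp/value rows."""
    doc = json.loads(payload)
    # datetime.fromisoformat() only accepts a trailing "Z" from Python 3.11,
    # so normalize it to an explicit UTC offset first.
    ts = datetime.fromisoformat(doc["scored_at"].replace("Z", "+00:00"))
    ts = ts.astimezone(timezone.utc)
    rows = []
    for name, value in doc["outputs"].items():
        tag = f"{tag_prefix}.{doc['model']}.{name}"
        rows.append(TagValue(tag, ts, float(value)))
    return rows


if __name__ == "__main__":
    for row in to_tag_values(SAMPLE_RESPONSE):
        print(row.tag, row.timestamp.isoformat(), row.value)
```

Once outputs are in this shape, they can be stored and trended like any other process measurement.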

When accessing AI insights requires new skills, new logins, or new workflows, teams default to what they already know. The path of least resistance becomes ignoring the AI and relying on traditional methods, even when better insight is available.

Integration Work Falls Between Teams

Making AI insights accessible means integrating model outputs into existing operational dashboards, historians, and workflows. That work takes time and coordination.

IT has competing priorities. Data science teams are focused on model accuracy and performance, not visualization or operational context. Operations assumes someone else is responsible. Without clear ownership, integration never happens, and insights remain trapped where they were created.

Organizational Silos Reinforce Technical Ones

Data science, IT, and operations often operate under different management structures with different success metrics. Data science is rewarded for model performance. Operations is measured on uptime, throughput, and quality. Without intentional alignment, AI insights naturally stay within the team that produced them.

True AI insights accessibility requires more than technical connectivity. It requires organizational alignment and a deliberate strategy to operationalize AI across teams.

The Missing Layer Isn’t AI, It’s Accessibility

The irony is that the hardest part is already solved. The models work. The data exists. The infrastructure is in place.

What’s missing is the connectivity layer that makes AI outputs as accessible as any other operational data, visible in the tools people already use, in the context they understand, and at the moment decisions are made.

dataPARC: Enabling AI Insights Accessibility

The breakthrough in AI insights accessibility isn’t building better models or generating more predictions. It’s ensuring those insights reach the people who can act on them, in the tools they already use, without requiring new skills or disruptive workflow changes.

One Connectivity Layer for a Fragmented Data Landscape

AI platforms, Python models, cloud services like Azure ML and AWS SageMaker, specialized analytics tools, traditional historians, DCS and SCADA systems, MES platforms, laboratory systems, and enterprise databases all generate valuable data, but they don’t speak the same language.

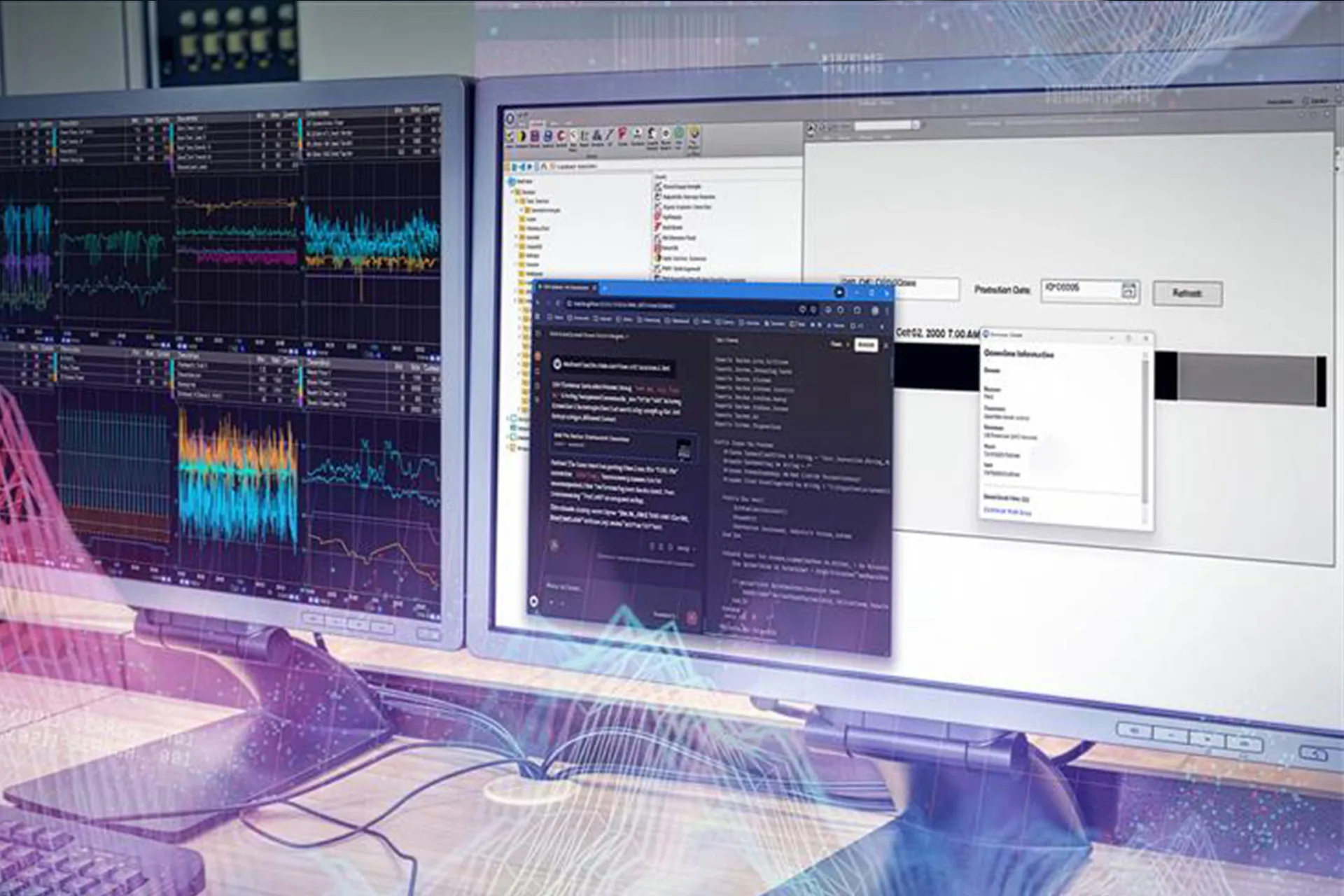

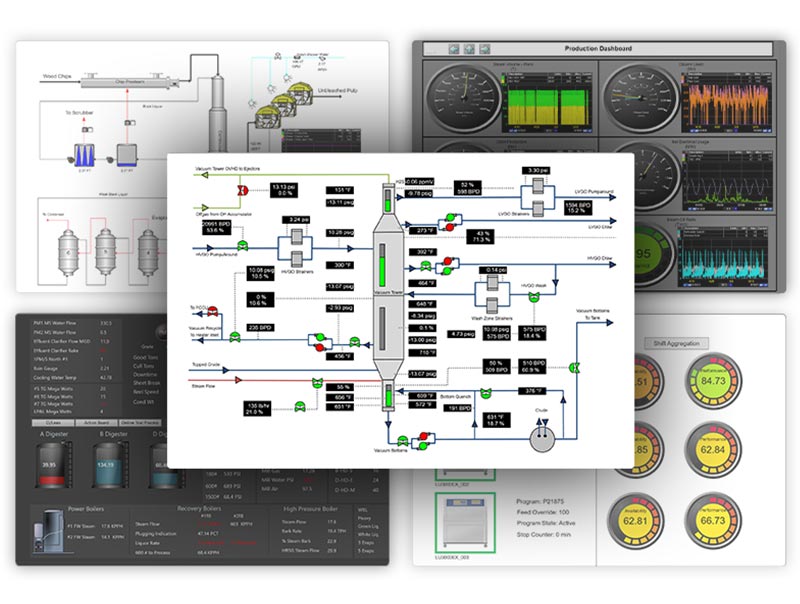

PARCview provides a single source of truth for viewing all your industrial data. Import AI data to view it right alongside process and lab information.

dataPARC serves as the connectivity layer that brings these sources together. AI outputs are ingested alongside operational data and made accessible through a single interface. Instead of managing disconnected systems, organizations gain a unified view where insights are no longer isolated by tool, team, or technology.

AI Insights Appear Where Work Already Happens

Operations managers shouldn’t have to log into a data science platform to see predictive maintenance alerts. Quality engineers shouldn’t need Python expertise to monitor anomaly detection outputs. Process engineers shouldn’t need API documentation to access model predictions.

With dataPARC, AI insights surface in the same trending tools, dashboards, and displays teams already use to monitor operations. Predictions, confidence scores, and anomaly indicators appear alongside familiar process variables, integrating naturally into existing workflows instead of forcing teams to adopt new ones.

Accessibility Drives Adoption

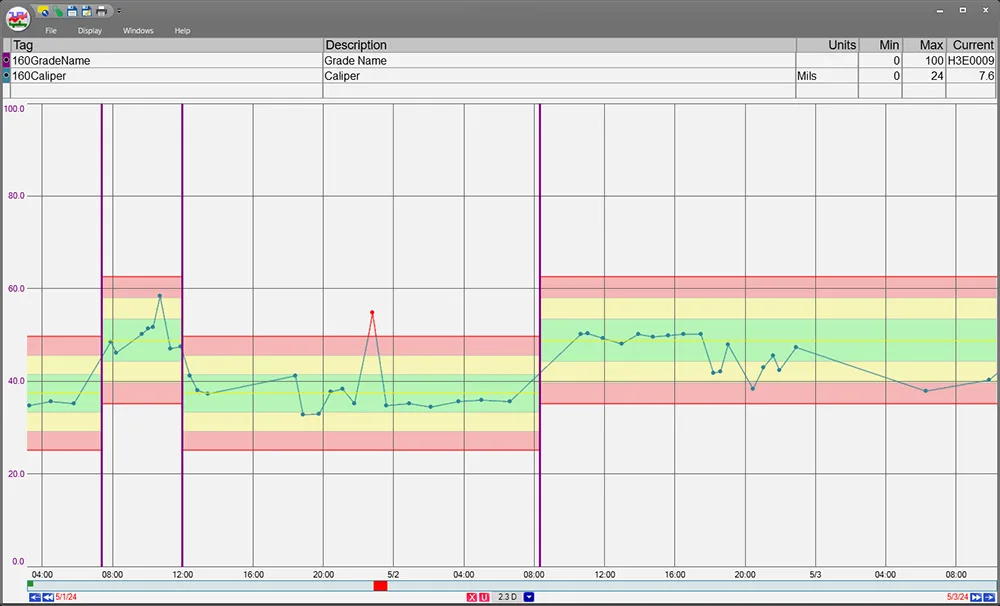

When AI outputs appear as data tags next to temperature, pressure, and flow measurements, they become immediately usable. Operators trend them. Engineers correlate them with process conditions. Quality teams apply limits and alerts.

The technical complexity behind the models stays hidden. What remains visible is the value. By removing access barriers, AI insights become part of daily decision-making rather than something reserved for specialized teams.
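Applying limits and alerts to an AI output uses the same logic as any process tag. As a minimal sketch, assuming a hypothetical failure-probability tag scaled from 0 to 1, here is a high-limit alarm with a persistence count (so a single noisy prediction doesn’t fire it) and a deadband (so it doesn’t chatter around the limit). The `LimitAlarm` class and its parameters are illustrative, not a dataPARC API.

```python
from dataclasses import dataclass, field


@dataclass
class LimitAlarm:
    """High-limit alarm for a single tag, with persistence and deadband.

    Illustrative only: the parameter names and behavior are assumptions,
    not a dataPARC alarm configuration.
    """
    high_limit: float
    deadband: float = 0.0   # must drop below high_limit - deadband to clear
    persistence: int = 1    # consecutive samples above the limit before alarming
    active: bool = field(default=False, init=False)
    _count: int = field(default=0, init=False, repr=False)

    def update(self, value: float) -> bool:
        """Feed one new sample and return whether the alarm is active."""
        if value > self.high_limit:
            self._count += 1
            if self._count >= self.persistence:
                self.active = True
        else:
            # Any sample at or below the limit resets the persistence count,
            # but the alarm only clears once the value leaves the deadband.
            self._count = 0
            if value < self.high_limit - self.deadband:
                self.active = False
        return self.active


if __name__ == "__main__":
    # Hypothetical AI tag: predicted probability of bearing failure (0 to 1).
    alarm = LimitAlarm(high_limit=0.7, deadband=0.05, persistence=2)
    samples = [0.65, 0.72, 0.75, 0.68, 0.60]
    print([alarm.update(v) for v in samples])
    # prints [False, False, True, True, False]
```

The 0.68 sample shows the deadband at work: it is below the limit, but not far enough below to clear an active alarm.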

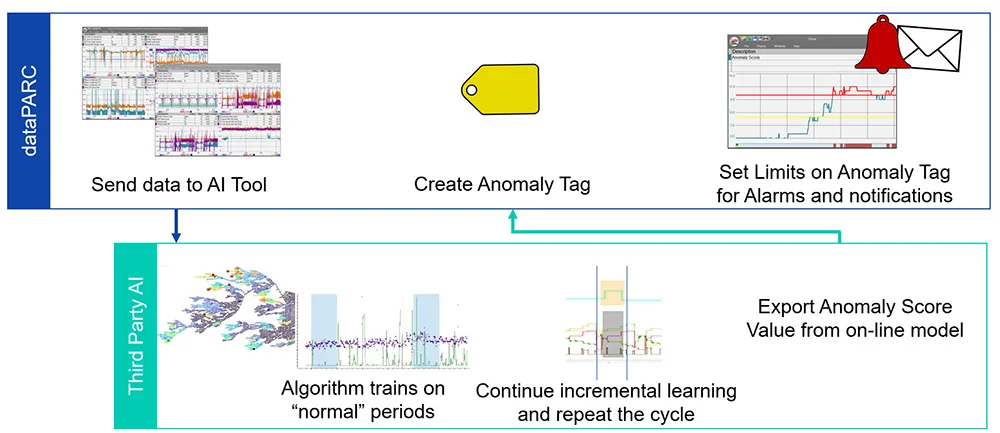

From PARCview, data can be sent to AI software for analysis, and the results inserted back into dataPARC as a tag. From there, users can treat it like any other tag, adding it to an alarm, dashboard, or trend.
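The round trip described above can be sketched in a few lines. This is a minimal sketch, not dataPARC’s actual integration mechanism: it assumes a SQL staging table (SQL connections are one of the connection options listed in the FAQ), uses an in-memory SQLite database as a stand-in, uses hypothetical tag names (`Reactor.Temp`, `Reactor.Pressure`, `AI.Quality.Predicted`), and substitutes a made-up linear formula for a real AI platform call.

```python
import sqlite3

# Hypothetical staging tables. In practice this would be whatever table your
# dataPARC SQL connection is configured to read; SQLite is only a stand-in.
SCHEMA = """
CREATE TABLE process_values (tag TEXT, ts TEXT, value REAL);
CREATE TABLE ai_tag_values  (tag TEXT, ts TEXT, value REAL);
"""


def predict_quality(temp_c: float, pressure_kpa: float) -> float:
    """Placeholder for a real model call (Azure ML, SageMaker, or a Python model).

    The linear formula and its coefficients are invented for illustration.
    """
    return 50.0 + 0.2 * temp_c - 0.05 * pressure_kpa


def score_and_write_back(
    conn: sqlite3.Connection, temp_tag: str, pressure_tag: str, out_tag: str
) -> int:
    """Score every timestamp that has both inputs and store results as out_tag."""
    rows = conn.execute(
        """
        SELECT t.ts, t.value, p.value
        FROM process_values AS t
        JOIN process_values AS p ON p.ts = t.ts
        WHERE t.tag = ? AND p.tag = ?
        ORDER BY t.ts
        """,
        (temp_tag, pressure_tag),
    ).fetchall()
    results = [(out_tag, ts, predict_quality(temp, pres)) for ts, temp, pres in rows]
    conn.executemany("INSERT INTO ai_tag_values VALUES (?, ?, ?)", results)
    conn.commit()
    return len(results)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO process_values VALUES (?, ?, ?)",
        [
            ("Reactor.Temp", "2024-05-01T14:00:00Z", 180.0),
            ("Reactor.Pressure", "2024-05-01T14:00:00Z", 300.0),
            ("Reactor.Temp", "2024-05-01T14:01:00Z", 185.0),
            ("Reactor.Pressure", "2024-05-01T14:01:00Z", 310.0),
        ],
    )
    n = score_and_write_back(conn, "Reactor.Temp", "Reactor.Pressure", "AI.Quality.Predicted")
    print(n, conn.execute("SELECT * FROM ai_tag_values ORDER BY ts").fetchall())
```

Keeping the model call behind one function means the AI platform can change without touching how results land as a tag.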

Context Builds Trust in AI Insights

AI predictions are most valuable when viewed in context. dataPARC allows users to see real-time AI outputs alongside the historical operational data that produced them. Teams can correlate predictions with actual outcomes, understand how models behave under different conditions, and evaluate performance over time.
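Correlating predictions with actual outcomes usually comes down to a few simple statistics computed over a window of history. A minimal sketch, assuming you have already pulled paired predicted and measured values for the same timestamps (for example, a hypothetical predicted-quality tag and the matching lab result): mean absolute error shows how far off the model typically is, bias shows whether it runs consistently high or low, and Pearson r shows whether it tracks the direction of change. These are common starting points, not dataPARC features; which metrics matter, and over what window, is a judgment for each site.

```python
from math import nan, sqrt
from statistics import fmean


def prediction_report(predicted: list[float], actual: list[float]) -> dict[str, float]:
    """Compare predictions with measured outcomes over the same timestamps.

    mae:       mean absolute error (typical size of a miss)
    bias:      mean of (predicted - actual); positive means the model runs high
    pearson_r: correlation between the two series (does it track changes?)
    """
    if len(predicted) != len(actual) or len(predicted) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    errors = [p - a for p, a in zip(predicted, actual)]
    mp, ma = fmean(predicted), fmean(actual)
    cov = sum((p - mp) * (a - ma) for p, a in zip(predicted, actual))
    sp = sqrt(sum((p - mp) ** 2 for p in predicted))
    sa = sqrt(sum((a - ma) ** 2 for a in actual))
    # Correlation is undefined if either series is constant.
    r = cov / (sp * sa) if sp > 0 and sa > 0 else nan
    return {"mae": fmean(abs(e) for e in errors), "bias": fmean(errors), "pearson_r": r}


if __name__ == "__main__":
    # Hypothetical predicted-quality values vs. matching lab results.
    predicted = [10.0, 12.0, 14.0, 16.0]
    actual = [11.0, 12.0, 15.0, 18.0]
    print(prediction_report(predicted, actual))
```

In this example the model tracks the lab results closely (r near 0.98) but reads about one unit low on average, which is exactly the kind of pattern that tells a team when to trust a prediction and when to adjust for it.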

This combination of real-time visibility and historical context builds trust. Teams learn when to act confidently on AI insights and when deeper investigation is needed.

From AI Capability to Organizational Impact

The power of dataPARC isn’t in replacing existing AI tools. It’s in making them accessible and actionable across the organization. By bridging the last mile between insight generation and insight consumption, dataPARC turns AI insights accessibility from a persistent challenge into a practical reality.

AI doesn’t deliver value when it generates insights. It delivers value when those insights are seen, trusted, and acted on.

The Enterprise Impact of AI Insights

When AI insights become accessible across the organization, the impact extends far beyond better dashboards for data scientists. Every role gains new capabilities, and the cumulative effect reshapes how the entire operation runs.

Operations Moves from Reactive to Proactive

Predictive maintenance alerts appear directly in operator dashboards alongside real-time equipment status. Instead of responding to failures, operations teams schedule maintenance during planned downtime based on AI predictions. Machine learning-driven optimization recommendations guide parameter adjustments as conditions change.

Operators don’t need to understand the algorithms behind the models. They simply see actionable intelligence in the same tools they already use. Downtime decreases, efficiency improves, and decision confidence increases.

Quality Intervenes Earlier and More Effectively

Anomaly detection outputs integrate directly into quality monitoring workflows, flagging subtle deviations hours before traditional methods would catch them. AI-driven quality predictions allow engineers to intervene early, adjusting processes before off-spec material is produced.

Correlations that once took days of manual analysis now appear instantly in familiar trending interfaces. Waste drops, rework declines, and customer satisfaction improves.

Engineering Accelerates Validation and Optimization

Process engineers access AI model outputs alongside the operational data used to generate them. They validate predictions against actual outcomes, understand where models perform well, and identify opportunities for refinement.

What once required dedicated data science support becomes part of everyday engineering work. AI insights guide continuous improvement efforts instead of one-off investigations. Root cause analysis speeds up, and engineers gain a deeper understanding of the process.

Leadership Decides with Shared, Visible Intelligence

Executive dashboards incorporate AI-driven KPIs and predictions that leaders can drill into, validate, and trust. Strategic decisions around capacity, efficiency investments, and operational priorities are informed by the same intelligence used on the plant floor. When AI insights are accessible at every level, alignment improves. Decisions are made faster, investment priorities become clearer, and competitive advantage compounds.

From AI Capability to Enterprise Intelligence

AI insights accessibility transforms AI from a specialized technical capability into shared organizational intelligence. When insights are visible, trusted, and actionable across roles, the AI investments you’ve already made finally deliver enterprise-wide value.


Conclusion: From Isolated AI to Organizational Intelligence

AI investments only pay off when insights reach the people who can act on them. The most sophisticated machine learning models, the most accurate predictions, and the most advanced analytics platforms deliver no value if they remain accessible only to the teams that built them. An AI enabler is the difference between AI as an expensive experiment and AI as a lasting competitive advantage.

The organizations winning with AI aren’t necessarily the ones with the largest data science teams or the most complex models. They’re the ones that have eliminated AI data silos and democratized access to AI insights across operations, quality, engineering, and leadership. They recognized that generating insights is only half the challenge. Delivering those insights to decision-makers, in the tools they already use and without requiring technical expertise, is where transformation actually happens.

dataPARC closes this gap by connecting AI platforms to operational systems and making model outputs as accessible as any other process data. AI predictions, anomaly indicators, and analytics results become visible, trusted, and actionable across the organization. Existing AI investments gain new life. Teams gain new capabilities. Intelligence flows seamlessly from insight generation to operational action.

If your predictive models and analytics remain trapped in technical silos, value is being left on the table every day. Break down those barriers, prioritize AI insights accessibility, and turn isolated outputs into shared organizational intelligence.

Your AI investment is too valuable to be limited by inaccessibility. Make sure the people who need your insights can actually get them.

FAQ: AI Data Accessibility

- What are AI data silos?

AI data silos occur when machine learning models and predictions remain trapped in specialized platforms or technical environments, inaccessible to the operations, quality, and engineering teams who could act on those insights. Systems like dataPARC break down AI data silos by connecting AI outputs with operational data, so that all the data is visible and actionable together.

- Why can’t operations teams access AI insights directly?

AI platforms are designed for data scientists, not plant floor personnel. Unfamiliar interfaces, authentication requirements, and tools that demand specialized knowledge create technical barriers. dataPARC bridges this gap by surfacing AI outputs in the familiar trending and dashboard interfaces that operations teams already use daily.

- How does dataPARC connect to AI platforms?

dataPARC can connect to AI data in several ways, depending on the system. SQL connections, flat files, and the dataPARC historian are some of the options for connecting to a third-party platform.

- Do users need technical skills to access AI insights through dataPARC?

No. AI predictions appear as standard data tags in PARCview alongside temperature, pressure, and other process variables, so no special training or technical expertise is required. Users trend AI outputs, create dashboards, and set alerts using the same intuitive tools they already know.
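The flat-file option mentioned in the FAQ can be as simple as a CSV of tag, timestamp, and value rows that a scheduled job writes after each scoring run. A minimal Python sketch follows; the three-column layout, header names, ISO 8601 UTC timestamps, and the example tag `AI.Bearing.FailureProb` are assumptions for illustration, so check the import format your dataPARC configuration actually expects.

```python
import csv
import io
from datetime import datetime, timezone
from typing import Iterable, TextIO


def write_tag_csv(rows: Iterable[tuple[str, datetime, float]], out: TextIO) -> int:
    """Write (tag, timestamp, value) rows as CSV with a header line.

    The column names and ISO 8601 UTC timestamps are assumptions; match the
    layout your flat-file import is actually configured for.
    """
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["tag", "timestamp", "value"])
    count = 0
    for tag, ts, value in rows:
        if ts.tzinfo is None:
            # Refuse naive timestamps rather than silently guessing a time zone.
            raise ValueError(f"timestamp for {tag} has no time zone")
        writer.writerow([tag, ts.astimezone(timezone.utc).isoformat(), value])
        count += 1
    return count


if __name__ == "__main__":
    buf = io.StringIO()  # for a real file: open(path, "w", newline="")
    write_tag_csv(
        [("AI.Bearing.FailureProb", datetime(2024, 5, 1, 14, 30, tzinfo=timezone.utc), 0.82)],
        buf,
    )
    print(buf.getvalue(), end="")
```

Normalizing every timestamp to UTC avoids the daylight-saving gaps and overlaps that make local-time flat files hard to line up with process data.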
