LLMs in manufacturing are no longer a future concept; they’re practical tools engineers are using right now to accelerate development. Across industrial sites, countless ideas for dashboards, workflows, and automations get stuck behind coding barriers. Large Language Models change that by turning plain-language requests into working code, helping teams move from concept to implementation far faster.

Why LLMs Are a Turning Point for Industrial Software Development

Manufacturing has traditionally relied on specialized developers or IT teams to build custom dashboards, connectors, and automation workflows. This created long lead times and limited the number of people who could translate operational knowledge into working tools.

LLMs shift that balance. They allow engineers, operators, and domain experts to directly generate visualization logic, automation scripts, and integration code using natural language. The people who best understand the process no longer need to wait for development cycles; they can prototype, test, and refine ideas themselves.

This changes the pace of problem-solving. Routine tasks like report generation, data parsing, dashboard logic, or conditional displays can be built in minutes. More importantly, complex tasks such as API integrations, multi-step workflows, and event-driven applications move from “someday projects” to “same-day prototypes.”

How LLMs Collapse the Time Between Idea and Implementation

In industrial operations, engineers constantly identify opportunities to automate tasks, streamline troubleshooting, and improve visibility. Yet many of these ideas stall because implementation requires custom code or IT support. Large Language Models (LLMs) are changing that by bridging the gap between domain expertise and software development.

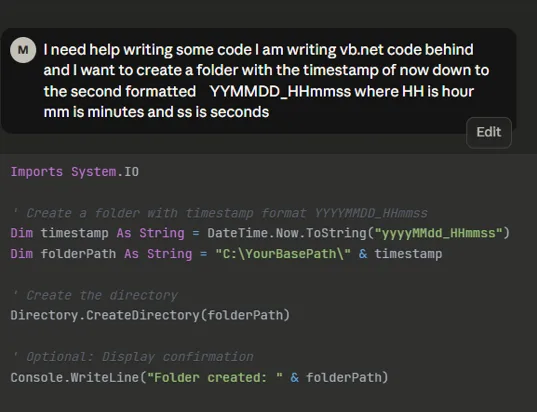

LLMs are AI systems trained to understand and generate language, including programming languages. Instead of writing hundreds of lines of VB.NET or XAML, users can describe what they need in plain terms: “Create a dashboard that filters by product and color-codes tanks out of spec” or “Build a workflow that sends a daily downtime report.” The model interprets those requests and returns code snippets that, once reviewed and tested, can be inserted into a system such as dataPARC’s Graphics Designer or Task Workflows.
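To show what the first example request boils down to, here is the core filter-and-color logic, sketched in Python for readability. Inside dataPARC the generated code would be VB.NET code-behind bound to the display. The `Tank` fields, the limit values, and the color names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Tank:
    name: str
    product: str
    level: float  # hypothetical reading, e.g. percent full


def tank_display(tanks, product, low, high):
    """Keep only tanks running `product`; color each Red if out of spec, else Green."""
    return {
        t.name: "Green" if low <= t.level <= high else "Red"
        for t in tanks
        if t.product == product
    }


tanks = [Tank("T1", "A", 55.0), Tank("T2", "A", 92.0), Tank("T3", "B", 10.0)]
print(tank_display(tanks, "A", 20.0, 90.0))
```

Keeping the rule in one small function makes it easy to check the LLM's output against a few known tanks before wiring it to live tags.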

With automated workflows, graphics can be emailed on a regular basis or when an alarm is triggered. Code for this can be written with the help of LLMs if users are not familiar with the syntax.

This enables engineers to move from concept to functional tools in hours rather than days. More importantly, it lets teams focus on process logic and visualization, what they know best, instead of syntax and framework details.

However, with this power comes responsibility. Because LLMs operate through online or third-party systems, users must handle data carefully:

- Never include sensitive information such as authentication keys, IP addresses, or tag names in prompts.

- Use placeholders (for example, “###PLANT_SERVER###”) when referencing internal systems or credentials.

- Validate all generated code before deploying it in production. Even well-intentioned scripts can introduce security or performance issues if not reviewed.

- Collaborate with IT when enabling additional database or API connections to maintain compliance and network safety.
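The placeholder practice can be partly automated. The Python sketch below assumes you maintain your own list of sensitive literals. It swaps those literals and any IPv4 addresses for placeholders before text goes into a prompt, then restores the real values locally in the code that comes back. It is a convenience, not a guarantee. Tag names and other secrets are only caught if they are listed in `known_secrets`, and the IP pattern is deliberately crude.

```python
import re

# Crude IPv4 matcher; it will also catch version-like strings such as "1.2.3.4".
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text, known_secrets):
    """Swap sensitive strings for placeholders before text goes into a prompt.

    known_secrets maps literal values (server names, keys, tag names) to the
    placeholder to use, e.g. {"PLANTSRV01": "###PLANT_SERVER###"}.
    Returns the redacted text and a placeholder -> original mapping.
    """
    restore = {}
    for secret, placeholder in known_secrets.items():
        if secret in text:
            text = text.replace(secret, placeholder)
            restore[placeholder] = secret

    ip_map = {}

    def number_ip(match):
        # The same address always gets the same numbered placeholder.
        ip = match.group(0)
        if ip not in ip_map:
            ip_map[ip] = f"###IP_{len(ip_map) + 1}###"
        return ip_map[ip]

    text = IPV4.sub(number_ip, text)
    restore.update({ph: ip for ip, ph in ip_map.items()})
    return text, restore


def restore_placeholders(code, restore):
    """Put the real values back into model-generated code, locally."""
    for placeholder, original in restore.items():
        code = code.replace(placeholder, original)
    return code
```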

When applied responsibly, LLMs allow process engineers to rapidly prototype new ideas, reduce dependency on scarce programming resources, and improve overall plant agility, all while maintaining the security and governance standards required in industrial environments.

A quick note on LLM usage: Because engineers are using third-party tools like ChatGPT or Claude to generate code, you don’t need a fully modernized data foundation to begin experimenting. LLMs can deliver immediate value by helping teams speed up development, streamline workflows, and prototype new ideas today, even before broader AI infrastructure is in place.

The Visualization and Automation Layer That LLMs Build On

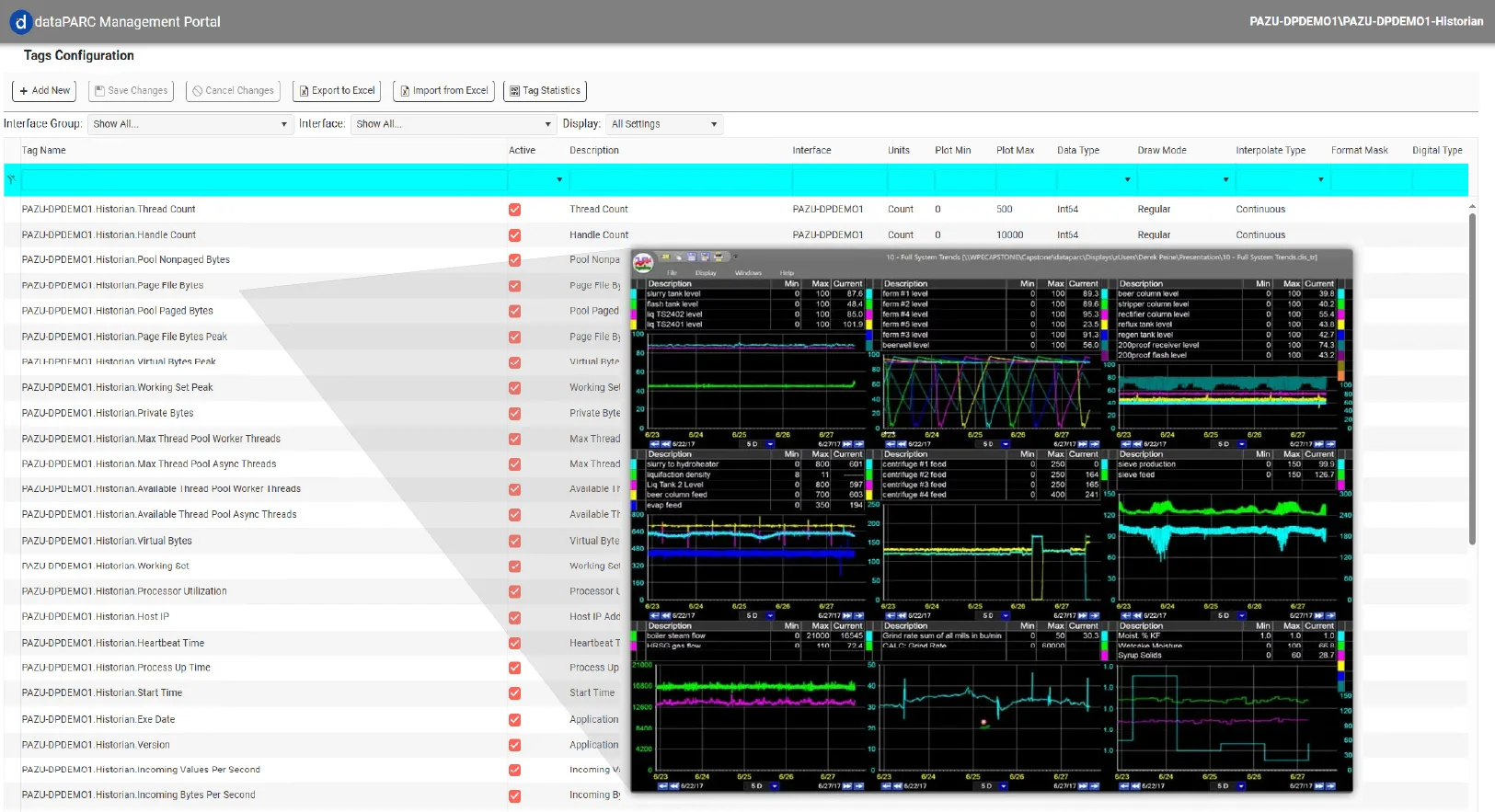

For a specific example, consider the dataPARC toolset. At the core of dataPARC’s visualization environment is the Graphics Designer, alongside a workflow designer for creating automations. Graphics Designer lets engineers move beyond static dashboards into interactive, automated applications, while the workflow designer lets users create automations such as saving data or sending emails.

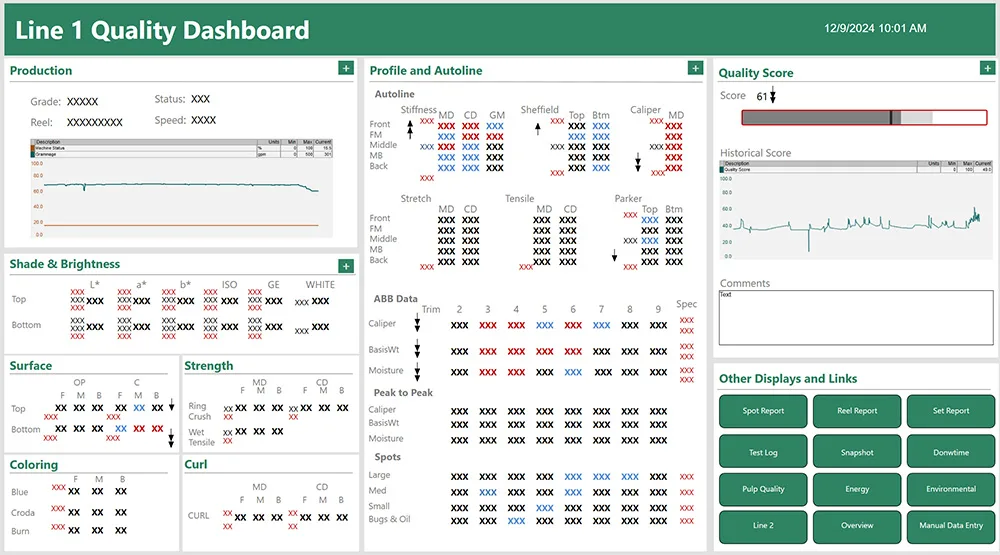

Graphics Designer is where operators and engineers build real-time, interactive dashboards. At its simplest, it lets users drag and drop tags to visualize process data through gauges, trends, or KPIs. But the tool’s real power lies in its flexibility. With embedded scripting, users can add conditional logic, visibility toggles, navigation buttons, or event-driven interactions. A dashboard can become a troubleshooting workspace, highlighting out-of-spec conditions, linking to related trends, or triggering a workflow when a limit is reached.
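Triggering a workflow when a limit is reached usually needs to fire once per excursion, not on every scan while the value stays high. A common way to do that is to add a deadband (hysteresis) before the trigger re-arms. The Python sketch below illustrates the idea. The class name, limit, and deadband values are illustrative assumptions, and in Graphics Designer this logic would be written in VB.NET.

```python
class LimitTrigger:
    """Fire once when a value rises above `limit`; re-arm only after it
    falls back below `limit - deadband`, so noise near the limit does not
    trigger repeatedly."""

    def __init__(self, limit, deadband):
        self.limit = limit
        self.deadband = deadband
        self.armed = True

    def update(self, value):
        """Return True only on the scan where the limit is first crossed."""
        if self.armed and value > self.limit:
            self.armed = False
            return True
        if not self.armed and value < self.limit - self.deadband:
            self.armed = True
        return False


trigger = LimitTrigger(limit=100.0, deadband=5.0)
print([trigger.update(v) for v in [90, 101, 103, 99, 101, 94, 102]])
```

Without the deadband, a value hovering around the limit would start the workflow over and over.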

Graphics are a great place to display visual information, KPIs, and create interactive troubleshooting, all at the operator’s fingertips.

PARCtask Workflow complements this by handling the automation behind the scenes. It can run on schedules, respond to alarms, watch file directories, or collect data via APIs. Typical use cases include automatically generating reports, uploading lab results, syncing data between systems, or kicking off analyses when new information arrives.
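Directory watching can be as simple as polling a folder and remembering which files have already been handled. The minimal Python sketch below assumes new files arrive as `*.csv` and that file names are unique. PARCtask's own file-watch trigger is configured in the workflow designer, so this only illustrates the idea.

```python
from pathlib import Path


def new_files(folder, seen, pattern="*.csv"):
    """Return files matching `pattern` in `folder` that are not yet in `seen`,
    oldest first. `seen` is a set of file names, updated in place so the
    next poll skips files that were already returned."""
    found = sorted(
        (p for p in Path(folder).glob(pattern) if p.name not in seen),
        key=lambda p: (p.stat().st_mtime, p.name),
    )
    seen.update(p.name for p in found)
    return found
```

A scheduled task would call `new_files` on each run with the same `seen` set and process only what it returns.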

When paired with LLMs, both tools become even more powerful. Instead of manually writing code, engineers can describe the desired function, such as “send an email with this chart every shift” or “color the pump icon red when flow drops below target”, and let the model generate the supporting VB.NET or XAML. This combination of intuitive visualization, automated workflows, and assisted coding allows teams to build fully customized operational tools that integrate deeply into existing systems without relying on external developers.
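For a request like “send an email with this chart every shift,” the returned code typically builds a message with the chart image attached and hands it to a mail server. The Python sketch below uses only the standard library. `###SMTP_SERVER###` is a placeholder in line with the data-handling rules, and the shift schedule is assumed to come from the workflow rather than from this code.

```python
import smtplib
from email.message import EmailMessage


def build_chart_email(png_bytes, filename, sender, recipients, subject):
    """Build a plain-text email with a chart image (PNG bytes) attached."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg["Subject"] = subject
    msg.set_content("Attached is the latest chart for this shift.")
    msg.add_attachment(png_bytes, maintype="image", subtype="png", filename=filename)
    return msg


def send(msg, host="###SMTP_SERVER###", port=25):
    """Send through an internal SMTP relay (placeholder host; confirm with IT)."""
    with smtplib.SMTP(host, port) as smtp:
        smtp.send_message(msg)
```

Usage would be along the lines of `send(build_chart_email(Path("chart.png").read_bytes(), "chart.png", ...))` once the real relay host is filled in locally.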

In short, Graphics Designer visualizes data, PARCtask automates actions, and LLMs accelerate creation, together forming a flexible, engineer-driven environment for advanced industrial applications.

How Engineers Communicate With LLMs to Build Production Tools

Getting useful results from an LLM depends on how you frame your request. Just like tuning a process control loop, clarity and precision in your inputs determine the quality of your outputs. When building or editing graphics and workflows, the most effective prompts include context about both the environment and the desired outcome.

Start by telling the model exactly what kind of application you are working on. For example, with dataPARC graphics:

“I’m creating a WPF application with VB.NET code behind.”

This ensures the model generates code compatible with your software’s structure and syntax. Without that context, the model might default to another language, producing code that won’t compile.

Once the environment is defined, describe the specific behavior you want in clear, action-oriented language. For instance:

“Create a button that runs a report when clicked and displays a confirmation message once the process completes.”

Avoid broad or ambiguous requests like “Write code for a report button”, which leave too much room for interpretation.

When troubleshooting, provide both the error message and a brief description of what the code should do. This helps the LLM focus on the relevant fix rather than unnecessarily rewriting large sections.

An example of a simple code request to an LLM and the code it returned.

Engineers have also found it helpful to include “soft constraints” in their prompts. Examples include:

- “Use multi-threading only if it improves user experience.”

- “Avoid interpolated strings or dollar signs in VB.NET.”

- “Assume all objects live within a canvas.”

These small additions prevent common syntax issues and keep the generated code aligned with dataPARC conventions. When working with other systems, you will need to find what syntax works best and include those constraints when writing prompts to the LLM.
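The prompt structure in this section (environment first, then the specific behavior, soft constraints, and the exact error message when troubleshooting) can be captured in a small template so it is applied the same way every time. The following is a minimal Python sketch. The function name and section wording are illustrative, not a dataPARC feature.

```python
def build_prompt(environment, request, constraints=(), error=None):
    """Assemble a prompt: environment, request, soft constraints, and
    (only when troubleshooting) the exact error message."""
    parts = [environment, request]
    if constraints:
        parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    if error:
        parts.append("The current code fails with this error:\n" + error)
    return "\n\n".join(parts)


print(build_prompt(
    "I'm creating a WPF application with VB.NET code behind.",
    "Create a button that runs a report when clicked and displays a "
    "confirmation message once the process completes.",
    ["Avoid interpolated strings or dollar signs in VB.NET.",
     "Assume all objects live within a canvas."],
))
```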

Lastly, effective prompting is an iterative process. Build in short cycles: generate, test, debug, and refine. Treat the LLM as a coding assistant rather than an infallible source. With practice, engineers can reduce what used to be multi-day scripting tasks into a few structured conversations and rapid test cycles.

Where LLMs Are Already Transforming Engineering Workflows

Engineers across industries are already using LLMs to streamline development, automate data movement, and improve visibility. Below are several practical examples that show how large language models can turn complex automation or visualization tasks into fast, repeatable solutions.

1. Connecting to Cloud APIs for External Data

Many sites receive valuable data from external systems, like vendor equipment skids, environmental monitors, or energy sensors, but struggle to bring that information into their historian. By using an LLM to generate a VB.NET connector, engineers can quickly build a secure interface to a vendor API.

Once the connection logic is in place, a workflow can periodically pull the latest readings, save them to a flat file, and insert the data into the dataPARC historian for trending and reporting.

The result: external cloud data appears alongside process tags with no manual downloads or spreadsheets required.
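The connector and flat-file steps might look like the following Python sketch. The endpoint, bearer-token header, and JSON shape (a list of objects with `sensor`, `time`, and `value` keys) are assumptions standing in for whatever the vendor documents. The CSV columns should be matched to the import format your historian expects.

```python
import csv
import json
import urllib.request

# Placeholders: fill in the real host and token locally, never in a prompt.
API_URL = "https://###VENDOR_API###/readings/latest"
API_TOKEN = "###API_TOKEN###"


def fetch_readings(url=API_URL, token=API_TOKEN):
    """GET the latest readings; assumes the vendor returns a JSON list."""
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def write_flat_file(readings, path):
    """Write readings as tag,timestamp,value rows for a historian import step."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["tag", "timestamp", "value"])
        for r in readings:
            writer.writerow([r["sensor"], r["time"], r["value"]])
    return len(readings)
```

Splitting fetching from file writing lets the file step be tested offline with sample data before the workflow is pointed at the real API.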

2. Automating Email and File Ingestion

Some departments still rely on vendor emails for reports, pricing updates, or lab results. Rather than manually opening attachments, an engineer used an LLM to write a workflow that connects to Office 365 through its documented API, checks an inbox for unread messages with attachments, downloads them, and saves the files locally.

A companion task parses those attachments and loads the contents into the historian or an MDE table. This automation turns what used to be a manual, error-prone routine into a reliable, scheduled task that maintains data continuity without user intervention.
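Assuming the Office 365 mailbox is reached through the Microsoft Graph REST API, the download-and-save step could be sketched in Python as shown below. The mailbox and access token are placeholders. Obtaining the token (typically an app registration approved by IT) is out of scope, and only file attachments are saved.

```python
import base64
import json
import urllib.parse
import urllib.request
from pathlib import Path

GRAPH = "https://graph.microsoft.com/v1.0"
TOKEN = "###ACCESS_TOKEN###"  # placeholder; obtain through your tenant's approved auth flow


def graph_get(url):
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {TOKEN}"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def unread_with_attachments(mailbox="###MAILBOX###"):
    query = urllib.parse.quote("isRead eq false and hasAttachments eq true")
    return graph_get(f"{GRAPH}/users/{mailbox}/messages?$filter={query}")["value"]


def message_attachments(message_id, mailbox="###MAILBOX###"):
    return graph_get(f"{GRAPH}/users/{mailbox}/messages/{message_id}/attachments")["value"]


def save_file_attachments(attachments, folder):
    """Decode file attachments from a Graph response and save them; return the paths."""
    out = Path(folder)
    out.mkdir(parents=True, exist_ok=True)
    saved = []
    for att in attachments:
        if att.get("@odata.type") != "#microsoft.graph.fileAttachment":
            continue  # skip item and reference attachments
        path = out / Path(att["name"]).name  # drop any directory parts from the name
        path.write_bytes(base64.b64decode(att["contentBytes"]))
        saved.append(path)
    return saved
```

A scheduled task would loop over `unread_with_attachments()`, pass each message's `message_attachments(...)` result to `save_file_attachments`, and then mark the message as read so it is not processed twice.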

3. Product Lookup Dashboards

By combining an LLM-generated SQL query with graphics, one mill built a product lookup dashboard that returns all test results tied to a specific product ID. Users simply type the ID into a text box and click search; the display instantly retrieves process and manual data from multiple tables, displaying numeric, text, and timestamp values side by side.

This replaced a manual process of exporting lab data and aligning timestamps in Excel, saving hours of analysis time per shift.
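The lookup itself is a parameterized query across the relevant tables. The minimal sketch below uses Python's built-in `sqlite3` so it runs anywhere. The table and column names are hypothetical. Against the real historian or lab database you would use that database's driver and schema. Passing the product ID as a parameter, rather than pasting it into the SQL string, also prevents text typed into the search box from being executed as SQL.

```python
import sqlite3

# Hypothetical schema: numeric process results and text lab results per product.
LOOKUP_SQL = """
SELECT 'process' AS source, test_name, value AS num_value,
       NULL AS text_value, sample_time AS sampled_at
FROM process_results WHERE product_id = ?
UNION ALL
SELECT 'lab', test_name, NULL, comment, sample_time
FROM lab_results WHERE product_id = ?
ORDER BY sampled_at
"""


def lookup_product(conn, product_id):
    """Return every result for one product ID, oldest first."""
    return conn.execute(LOOKUP_SQL, (product_id, product_id)).fetchall()
```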

4. Downtime Image and Documentation Tracker

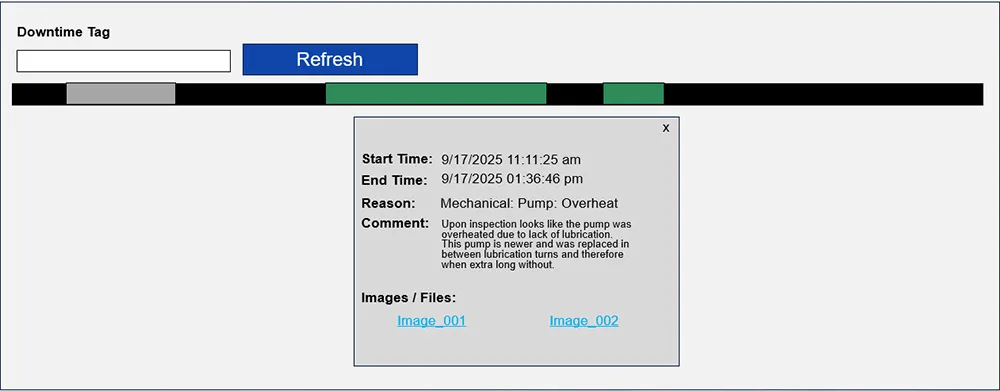

Another site used an LLM to help build a visual downtime timeline that associates photos or documents with each event. When a downtime alarm triggers, a workflow automatically creates a folder named with the event’s start time. Operators can upload photos, inspection sheets, or repair notes directly to that folder.

This sketch was the starting point for the downtime image graphic. It helps to draw or think through what you want the graphic to look like before describing it to the LLM, so the request is clear and concise.

The corresponding graphic displays each downtime event as a color-coded box: green if images exist, gray if not. Clicking a box reveals event details and attached files. This feature gave engineers an easy way to tie visual evidence and work documentation to the historian record, improving root-cause analysis and knowledge sharing.
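The two rules in this example (name each event folder by its start time, and show green only when the folder holds an image) are small enough to sketch directly. Python is used here for illustration. The root folder, the timestamp format, and the list of image extensions are assumptions.

```python
from datetime import datetime
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".bmp"}


def event_folder(root, start: datetime):
    """Create (if needed) the folder for a downtime event, named by its start time."""
    # No ':' in the name, since Windows does not allow it in folder names.
    folder = Path(root) / start.strftime("%Y-%m-%d_%H%M%S")
    folder.mkdir(parents=True, exist_ok=True)
    return folder


def event_color(folder):
    """Green if the event folder holds at least one image, otherwise Gray."""
    folder = Path(folder)
    has_image = folder.is_dir() and any(
        p.suffix.lower() in IMAGE_SUFFIXES for p in folder.iterdir() if p.is_file()
    )
    return "Green" if has_image else "Gray"
```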

Takeaways from LLMs in Manufacturing

Large Language Models are reshaping how engineers approach data visualization and automation. Instead of relying solely on IT or dedicated programmers, process experts can now use their own domain knowledge to build custom tools directly within dataPARC.

By describing a problem or desired behavior in natural language, an engineer can rapidly prototype a workflow, dashboard, or troubleshooting display. Combined with dataPARC’s flexible environment (Graphics for visualization and PARCtask for automation), LLMs remove traditional barriers to innovation and accelerate the move from idea to implementation.

However, with that accessibility comes responsibility. Engineers must maintain proper security and governance practices, ensuring that no proprietary data, authentication credentials, or internal network details are ever shared with online models. Every piece of generated code should be reviewed and tested before deployment.

When used thoughtfully, LLMs become a powerful extension of an engineer’s toolkit:

- Faster development of new dashboards and workflows.

- Easier iteration and troubleshooting with conversational debugging.

- Expanded creativity without requiring full-time coding expertise.

- Secure, contextual deployment within the existing historian environment.

As plants continue moving toward smarter operations and AI integration, this collaboration between LLMs and engineers represents the next evolution of data-driven manufacturing, one where innovation happens directly on the plant floor.

FAQ: Using LLMs in Manufacturing

- What are Large Language Models (LLMs) and how do they apply to manufacturing?

LLMs are AI systems trained to understand and generate human language, including structured formats like programming code. In manufacturing, they can assist engineers by translating plain-language requests, such as automating a workflow or creating a new display, into working VB.NET or XAML code for use in tools like dataPARC Graphics or PARCtask.

- Do I need to know how to code to use an LLM effectively?

Not necessarily. While understanding the basics of logic and syntax helps, LLMs allow non-programmers to describe what they want in natural language. Engineers can focus on process goals while the model provides the technical implementation, which can then be reviewed and tested before deployment.

- How can LLMs improve development speed inside dataPARC?

LLMs remove the bottleneck of manual scripting. Engineers can generate working code within minutes, iterate through testing, and refine designs faster than traditional development cycles. This accelerates dashboard creation, workflow automation, and integration with external systems.

- Are there security risks in using LLMs for industrial applications?

Yes, and awareness is key. Users should never share sensitive data, such as IP addresses, authentication tokens, or tag names, in LLM prompts. All generated code must be reviewed for safety and tested in a controlled environment before production use. The best practice is to treat LLMs as external tools and maintain existing corporate IT security standards.

- What’s the long-term benefit of using LLMs in industrial analytics?

LLMs lower the barrier between engineering expertise and implementation. They make it easier to experiment with automation, connect systems, and visualize complex data, helping manufacturers evolve from manual analysis to more agile, AI-ready operations.